Today we’re excited to share our Twilio integration in Daily Bots, where developers build adaptive conversational voice AI on the world’s leading global real-time infrastructure and open source SDKs.

Daily Bots is architected to give enterprises and developers the flexibility they need as they build adaptive voice AI. Now you can use your Twilio numbers and voice workflows directly with real-time conversational voice AI and LLM workflows powered by Daily Bots.

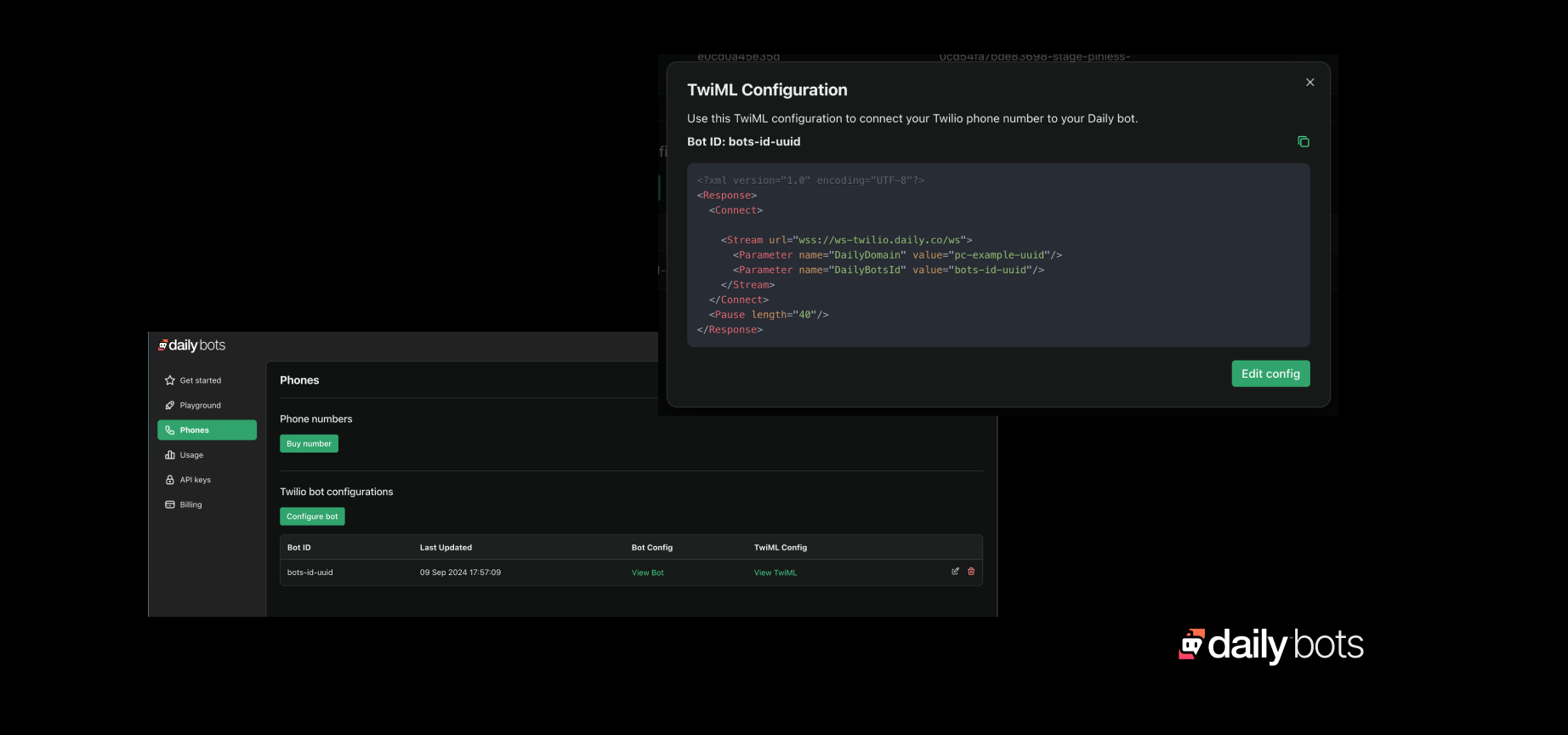

- With this release, Daily Bots natively supports Twilio WebSockets.

- Right in your Daily Bots dashboard (or via REST API), developers can create real-time voice AI agents configured with a TwiML code output.

- Attach the generated TwiML code output to a Twilio Bin and use it in any Twilio workflow such as Twilio Flex, Twilio Studio, and IVR. Support both dial-in and dial-out.

Daily Bots-powered agents are state-of-the-art, talking naturally in conversation, with ultra low latency, as they retrieve structured data using any LLM, for tasks like scheduling appointments, answering questions back-and-forth about a policy, completing intake, and triaging and routing more effectively.

Agents can be spun up and down as needed, to support goals like dynamic, streamlined operations and happier, engaged customers.

Start here, to try it out. Below we discuss real-time AI agents in voice workflows, how Daily Bots enables voice AI, and how the integration works.

Improving telephony workflows

Telephony is a bedrock of customer communications and omnichannel UX. Yet companies face what McKinsey calls a “a perfect storm of challenges,” including increasing call volumes and staffing issues.

Meanwhile, today’s customers are vocal about hold times, phone trees, voice mail, and unhappy experiences.

Over the last year, step function improvements in a suite of AI technologies — spanning orchestration, function calling, LLM and voice models, and more — have enabled agents that can bring AI’s structured data insights into real-time conversation.

Learn how Daily Bots brings state-of-the-art conversational abilities and flexibility:

- Avoid vendor lock in. The Daily Bots hosted offering is built on top of open source client SDKs and Pipecat, the fastest growing Open Source voice AI framework.

- Better customize for enterprise workflows. Daily Bots leverages function calling, tool use, and structured data generation. Integrate with headless knowledge base APIs and existing back-end systems.

- Run on proven infrastructure. We have managed infrastructure at global scale since 2016, with 99.99% uptime across 75 points of presence, around the world with SOC 2 Type 2 certification and HIPAA and GDPR compliance. Daily’s edge network delivers 13ms median first-hop latency to a coverage footprint of 5 billion end users.

- Deploy into your VPCs or private networks as needed. Daily can help you with on premises or VPC infrastructure if your use case requires. Our product and engineering leadership has extensive experience working with customers in high-security contexts.

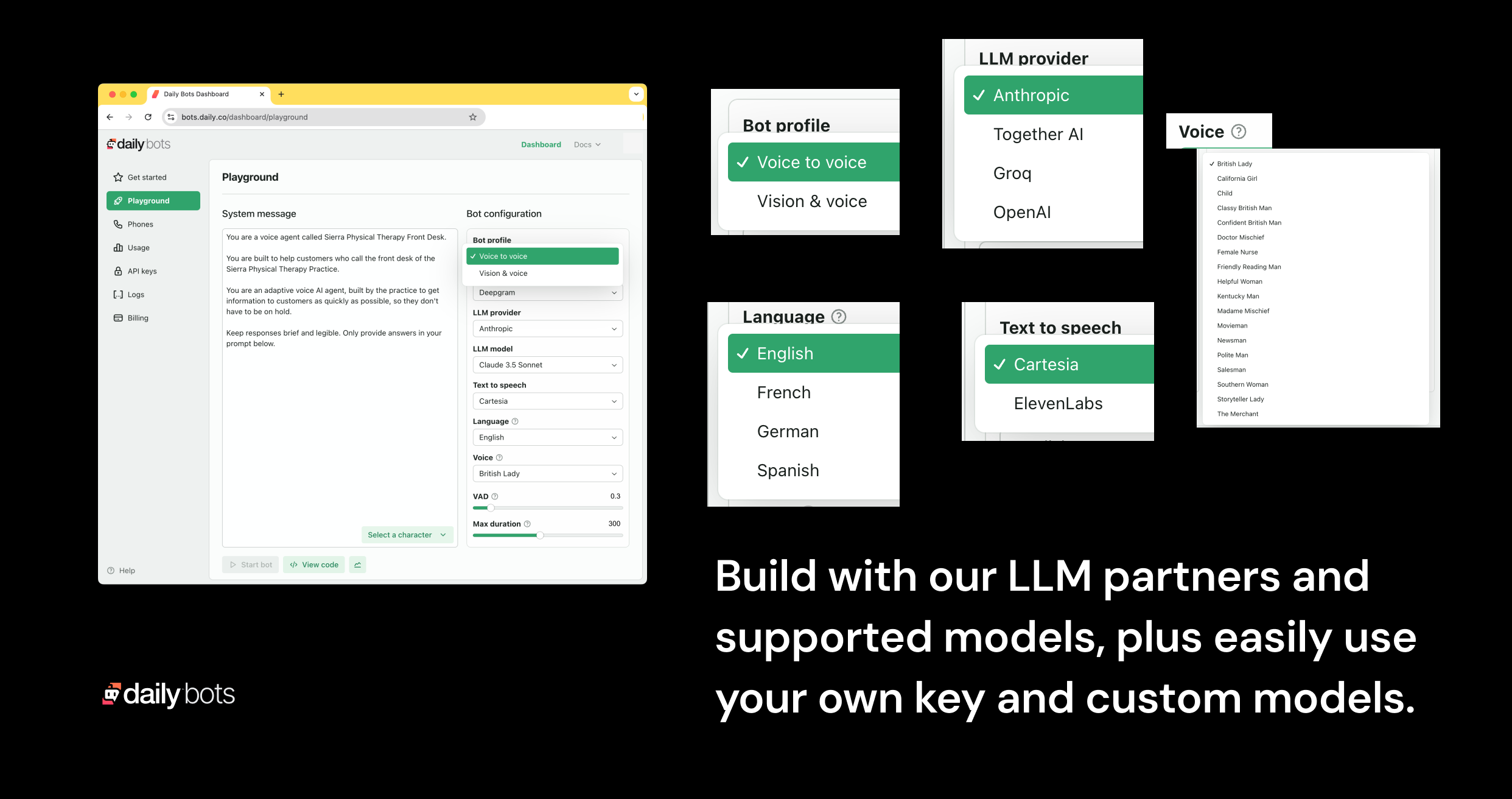

Right in your dashboard, you can build unified voice-to-voice applications with any LLM. Daily’s modular approach provides both a best-in-class developer experience and simple, transparent billing and pricing. Our partners include leading AI model providers including Anthropic, Cartesia, Deepgram, OpenAI and Together AI; or you can bring your own keys and use your preferred models (including custom models).

With this enablement, enterprise customers can flexibly use Daily Bots across their technology stack. Today’s release furthers that goal, allowing you to leverage your existing Twilio Voice assets together with the Daily Bots adaptive voice feature set.

Supporting Twilio Voice Workflows, building on Twilio WebSockets

Twilio is the leader in programmable voice, supporting over 10 million developers from startups to the enterprise. It has developed a complete suite of voice products — like Twilio Flex, Twilio Studio, and hosted Twilio apps — which lets its customers build across use cases like IVR, alerts and notifications, call tracking, and more.

Telephony is integral to voice conversations, and Daily Bots has always supported a full suite of telephone and SIP features. With this release, your customers can dial your Twilio number and talk to a Daily Bot. Use cases we have seen include:

- Business messaging platforms. A platform uses Twilio Studio to provision phone numbers for trades and services. When a customer dials the numbers, instead of being connected to a front desk or getting voice mail, they immediately talk to an AI agent, who can complete scheduling. The agent is able to ask questions in a conversation back-and-forth with the customer. Based on some qualifying questions, the agent can book an appointment immediately and/or route the customer for elevated support.

- Professional services platforms. To help patients achieve better outcomes after care, a provider's office pings them across channels, to check in. While outreach like SMS can remind a patient to call, an AI agent can handle this much more flexibly. The AI agent calls the patient and based on responses in conversation, can flag the patient for further follow up.

- Industries like financial services that build Twilio workflows in conjunction with Google Dialogflow extensively are transitioning previous-generation NLP workflows to AI conversational voice. Today’s LLMs deliver higher customer satisfaction, percentage of calls handled without human intervention, and call deflection metrics, at lower cost than older call center technologies.

You can learn more about how to build all of these things in the Daily Bots docs.

How it works

Daily Bots generates Twilio TwiML code for you. TwiML is the Twilio Markup Language. Developers use TwiML to build Twilio workflows. You can create a Daily Bots voice AI session wherever you use TwiML.

WebRTC and WebSockets infrastructure

Daily Bots supports both WebRTC and WebSockets depending on your use case:

- Daily Bots uses WebSockets for Twilio – and other telephony and SIP – connectivity. Twilio’s WebSockets implementation is robust and delivers good performance for two-way audio streams over server-to-server network connections.

- Daily Bots sessions can also run on top of Daily’s global WebRTC infrastructure. WebRTC provides lower-latency, higher-bandwidth, higher-quality connectivity and is the best protocol for delivering audio directly to end-user devices such as web browsers and mobile phones. Daily Bots sessions can be accessed by Daily WebRTC transport, PSTN telephone dial-in, telephone dial-out, Web browser apps, and native apps on iOS, Android, and other platforms. WebRTC also supports video and multi-participant real-time AI applications.

Developer Workflow

To set up a Twilio + Daily Bots integration:

- Configure a Daily Bot using the Daily Bots dashboard. This generates both a Daily Bot configuration and TwiML code.

- Open your Twilio console and create a TwiML Bin. Save the bin and assign it to a phone number in the Twilio console.

- You can create a Daily Bot session in any of your Twilio workflows, using this TwiML code.

For dial-in, calling the Twilio number automatically routes to the corresponding Daily Bot.

For dial-out, use the Twilio REST API or Twilio CLI to call the target phone number. Learn more here.

Get Started

Given the centrality of voice, leveraging the benefits of real-time conversational voice AI is a key consideration for our enterprise customers and developer community.

Get started here. Below are a few more links if you'd like to dive in more. We're excited to partner with you.

- Sign up, $10 in credits, https://bots.daily.co

- Visit the developer docs

- Contact sales for enterprise needs

- Watch a video on how to configure Daily Bots and Twilio Voice

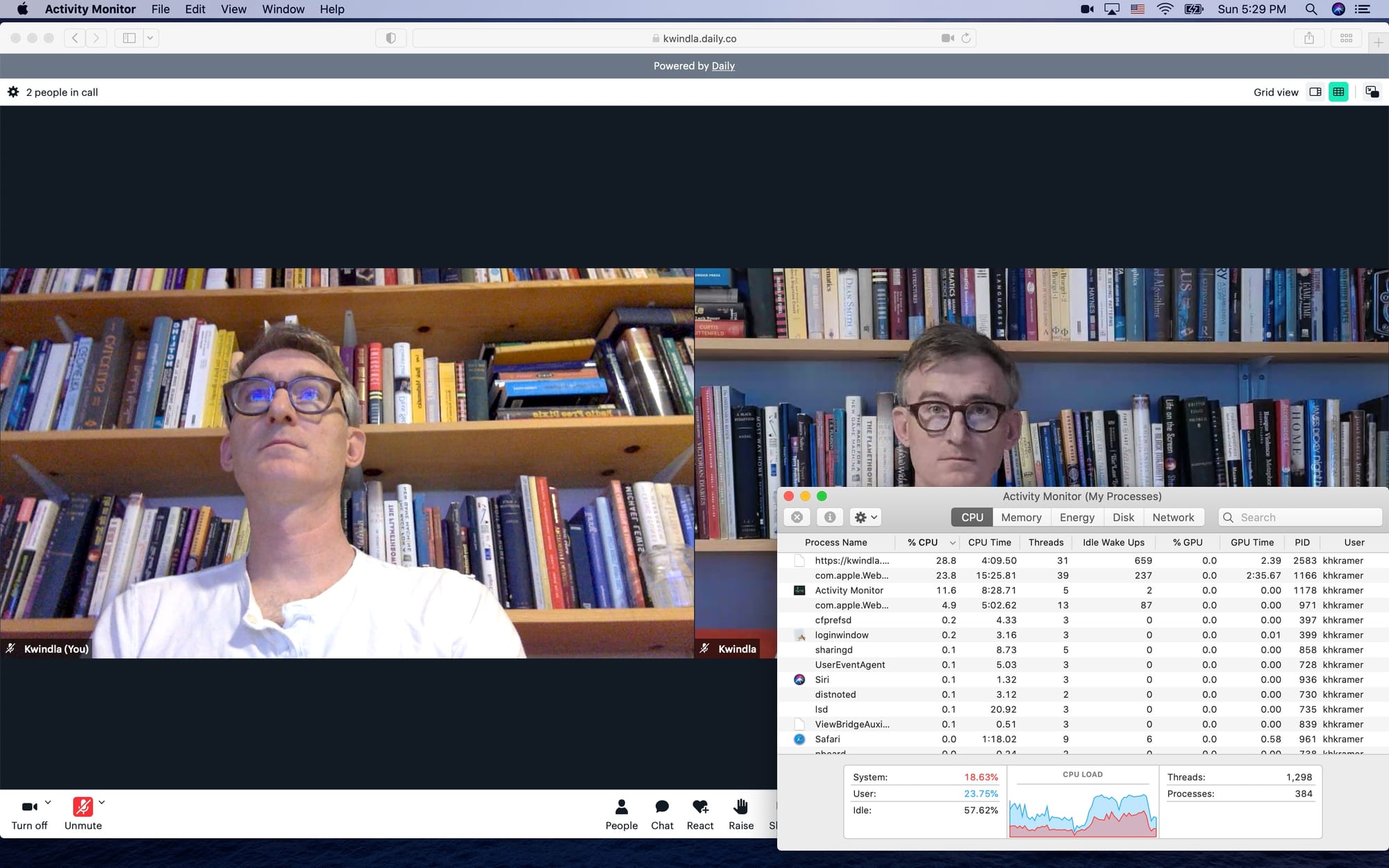

Follow us on social. Our technical cofounder Kwindla regularly posts about real-time conversational voice AI. You can follow Daily on LinkedIn and Twitter.

]]>

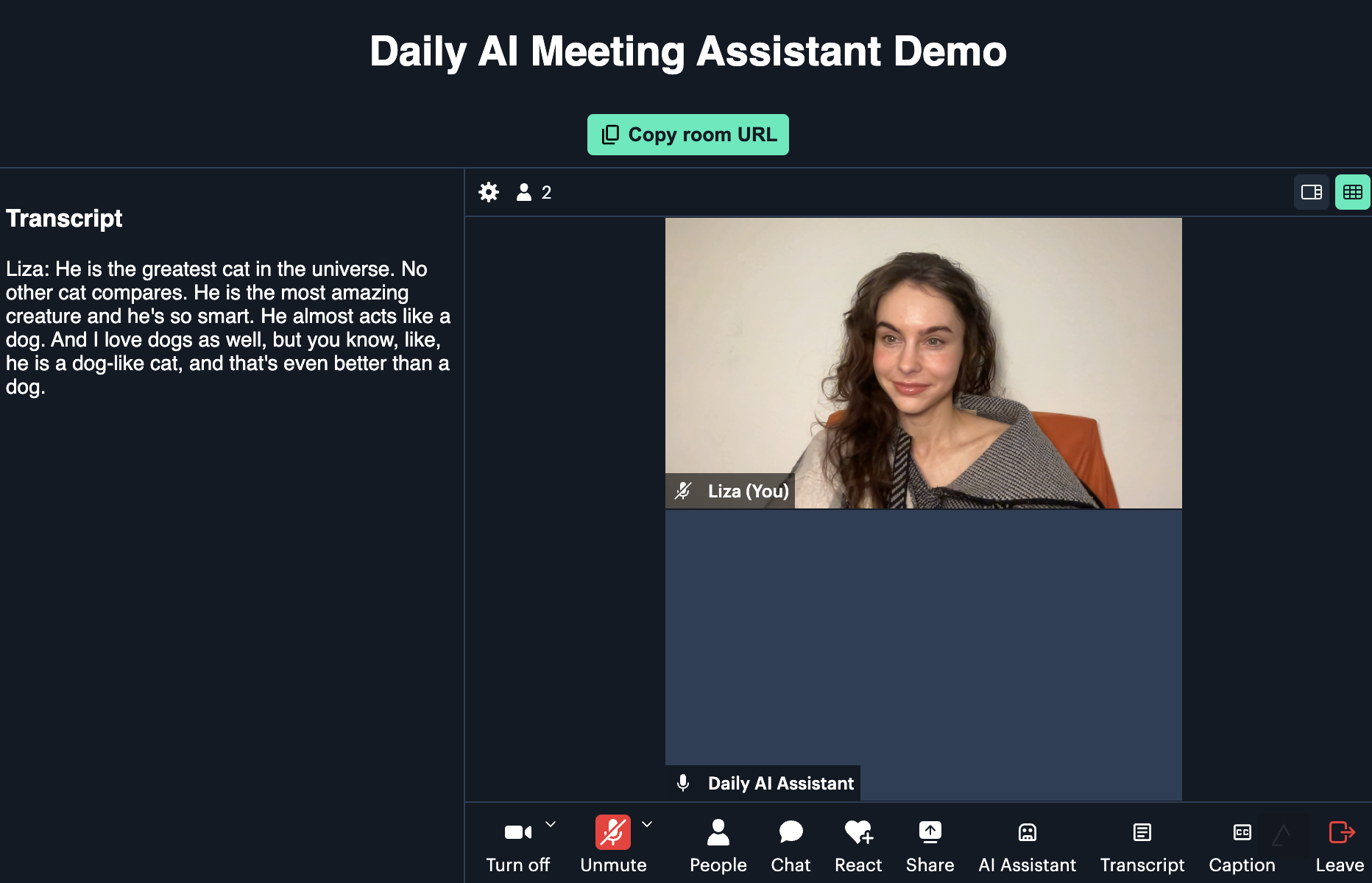

Today we’re sharing Daily Bots, a hosted AI bots platform.

Developers can ship voice-to-voice AI with any LLM; build with Open Source SDKs; and run on Daily’s real-time global infrastructure:

- Create AI agents that talk naturally.

- Design voice-to-voice AI flexibly, with leading commercial and open models. We’ve partnered with Anthropic, Cartesia, Deepgram, and Together AI. You can also use any LLM that supports OpenAI-compatible APIs.

- Build ultra low latency experiences for desktop, mobile, and telephone.

- Use the leading Open Source tooling for voice-to-voice and multimodal video AI. Daily Bots implements the RTVI standard for real-time inference, and is built on the Pipecat server-side framework.

- Launch quickly and scale on Daily’s global WebRTC infrastructure.

In this post, we’ll talk about why we built Daily Bots and what it does; how we're excited to work with our partners; and also some of the fun demos you can play with.

If you’d like to jump straight in, here are docs and demos — our playground demo with configurable LLMs; function calling demo and vision with Anthropic; and iOS and Android. Sign up here (with a $10 credit during launch week).

Why Daily Bots

At Daily, we’ve been building real-time audio and video infrastructure since 2016. Our customers have been developers building conversational experiences – it started with people talking to each other.

Now, with generative AI, the definition of conversational experiences has expanded. Today’s Large Language Models are very good at open-ended conversations. They can follow scripts and perform multi-step tasks. They can call out to external systems and APIs.

Voice-driven LLM workflows are starting to have a big impact in healthcare and education. LLMs are improving the customer support experience and enterprise workflows. Virtual characters will transform video games and entertainment. And this is just the start of the impact of AI.

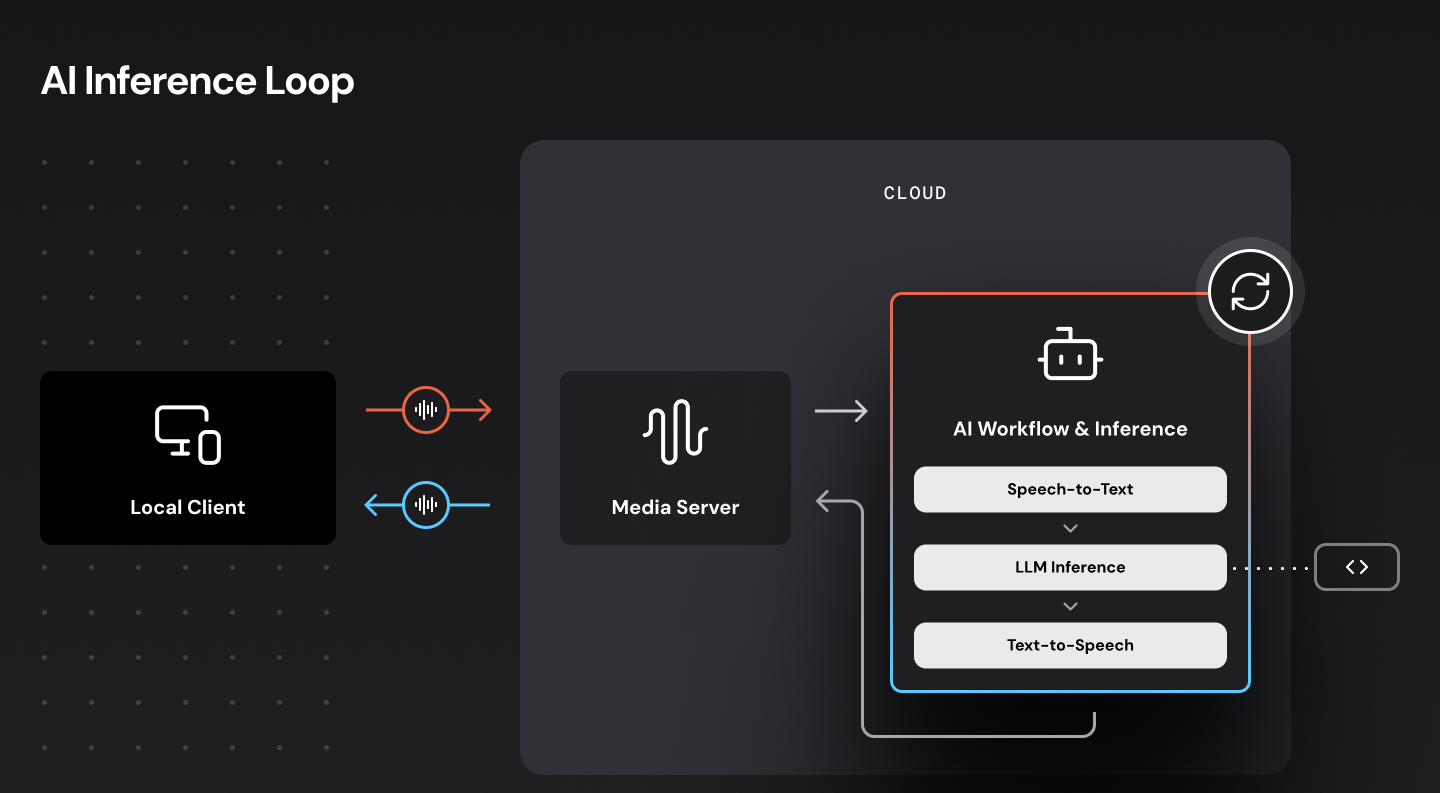

Building experiences in which humans can have useful, natural, real-time conversations with AI models involves:

- Choosing and writing code for the right generative AI models for your specific use case.

- Orchestrating the human -> AI -> human conversation loop, incorporating prompting, state management, data flow between models, and calling out to external systems.

- Standing up both audio/video infrastructure and AI/orchestration infrastructure – service discovery, routing, autoscaling, fault tolerance, observability.

- Having good client SDKs for all the platforms you need to support.

Over the past year and a half, as we’ve been helping our customers stand up new AI-powered real-time features, we’ve put together a complete set of tools that check all the boxes above.

We’ve rolled these tools and best practices into two big Open Source projects: Pipecat for server-side AI orchestration and the RTVI open standard for real-time inference clients. These are truly vendor neutral efforts, with a growing community and contributors from a wide range of stakeholders.

Now we’re filling another gap in the voice-to-voice and real-time ecosystem with Daily Bots.

- Daily Bots lets you run your RTVI/Pipecat AI agents end-to-end on Daily’s infrastructure.

- Start a real-time AI session with a single call to /api/v1/bots/start. Launch fast. Scale without limits. If your needs evolve beyond Daily Bots, you can take your code to another platform or stand up your own infrastructure.

AI that talks naturally

Human conversation is complicated!

We interrupt each other. We know when someone finishes speaking and expects us to talk. We change topics and go off on tangents.

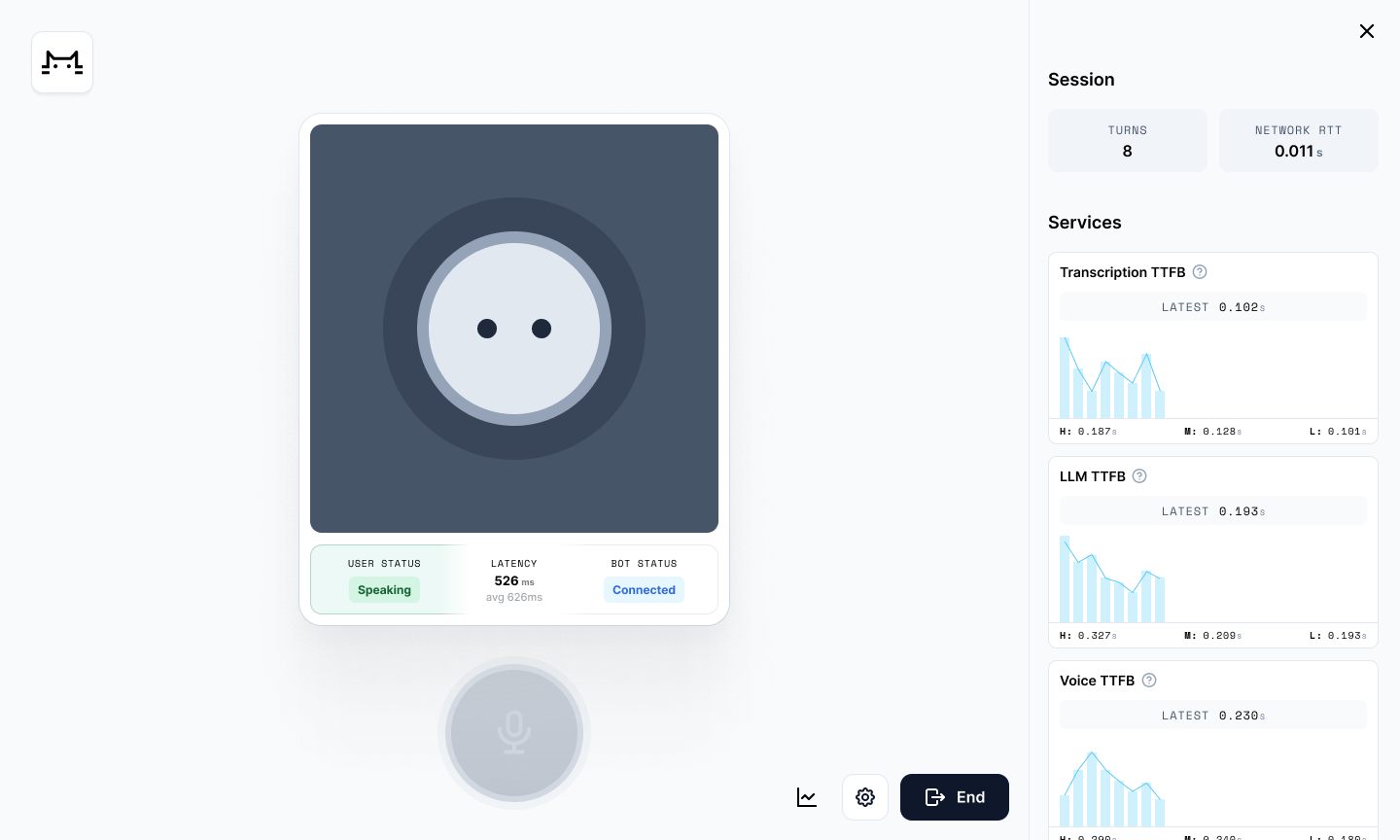

And, most of all, we almost always respond quickly. Long pauses make conversations feel so unnatural that most people will just opt out. It’s critical to have voice-to-voice response times faster than 1 second. (Faster than 800ms is better!)

Daily Bots implements best practices for all of the hard, low-level challenges that voice AI product teams face. With a few lines of code, developers can leverage:

- A modular architecture that enables easy switching between different LLMs and voice models. Use state-of-the-art LLMs with large parameter counts where needed. Or use models optimized for conversational response times.

- Multi-turn context management, with tool calling and vision input.

- Voice-to-voice response times as low as 500ms.

- Interruption handling with word-level context accuracy.

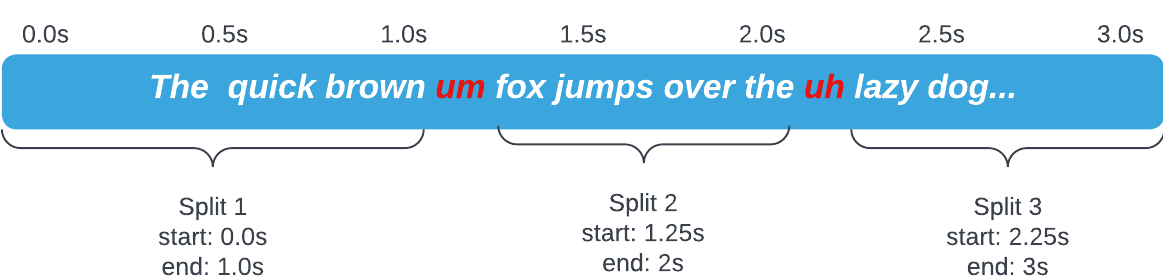

- Phrase endpointing that combines voice activity detection, semantic cues, and noise-level averaging.

- Echo cancellation and background noise reduction.

- Metrics and observability down to the level of individual media streams from every session.

Flexibility to use the best models, and the best models for your use case

Daily Bots developers can use both commercial and open models. You can use our integrated LLMs, or "Bring Your Own (API) Key" (BYOK) for your preferred service.

We’ve directly integrated with Anthropic, Cartesia, Deepgram, and Together AI.

- Anthropic’s Claude 3.5 Sonnet is an excellent multi-turn conversational model. Daily Bots includes support for Sonnet’s vision input, tool calling, and the brand new context caching feature.

- Cartesia’s Sonic voice model has raised the bar for voice quality at extremely low latencies. Cartesia offers a wide range of excellent voices, plus the ability to create your own voices.

- Deepgram is a long-time Daily partner and the long-time leader in real-time speech to text accuracy and multi-language support.

- Together AI delivers fast, high quality inference for all three sizes of Meta’s Llama 3.1 LLMs: 8B, 70B, and 405B.

With all of these partners, we do consolidated billing. You get just one bill from Daily, with line items showing your usage of each model. Also, it’s likely that you will benefit from higher rate limits and lower pricing when you use our partners’ services through Daily Bots. See Daily Bots pricing here.

Of course, you can always BYOK for both our partners and other services.

We can support any LLM provider that offers OpenAI-compatible APIs. We work regularly with OpenAI, Groq, and Fireworks, for example.

If you need custom models, our partners offer fine-tuned models and inference services for enterprise customers.

Daily Bots infrastructure can also be deployed inside your Virtual Private Cloud. If you manage your own inference, co-locating orchestration compute with inference has latency, cost, and compliance benefits.

Build now, and for the future

Our goal with Daily Bots is to accelerate the development of real-time, multimodal AI.

With a few lines of code, configure bots that scale on demand, on Daily’s infrastructure, automatically keeping pace as your application’s usage increases.

Write clients for iOS, Android, and the Web using the RTVI Open Source SDKs and Daily Bots helper libraries.

Buy phone numbers from Daily and make your bots accessible via dial-in.

All of this runs on Daily’s Global Mesh Network. Our distributed points of presence deliver 13ms first-hop latency to 5 billion people on six continents. (A little more, on average, if you happen to be in Antarctica.)

It’s also worth noting that Daily Bots is only one of your options, if you’re building real-time AI agents on the Open Source toolkits we use at Daily.

Definitely go check out Vapi and Tavus, for example. They’ve developed specialized technology, and best practices, to support different applications of multimodal inference. Vapi has great voice APIs, with user-friendly dashboards and excellent telephony support. Tavus’s Conversational Video Interface powers AI apps that can speak, hear, and see naturally. We’re proud these innovative platforms also leverage Daily’s WebRTC infrastructure.

If you’re interested in real-time AI, you can leverage Tavus or Vapi; build on the Daily Bots Open Source cloud; or strike out on your own and stand up your own Pipecat-based infrastructure!

Demos, demos, demos & starting out

We’ve had a ton of fun building out Daily Bots.

- Check out Chad’s function-calling weather reporter, and our ExampleBot playground demo. There's also a vision demo, and iOS and Android.

- Get started with a special launch week $10 credit by signing up for Daily Bots here. Or go straight to the docs.

AI is moving fast! Check out Vapi and Tavus. Join the Daily community on Discord. Let us know if you find Daily Bots, RTVI, and Pipecat useful. We’re excited to build the future with you.

]]>

Speed is important for voice AI interfaces. Humans expect fast responses in normal conversation – a response time of 500ms is typical. Pauses longer than 800ms feel unnatural.

Source code for the bot is here. And here is a demo you can interact with.

Technical tl;dr

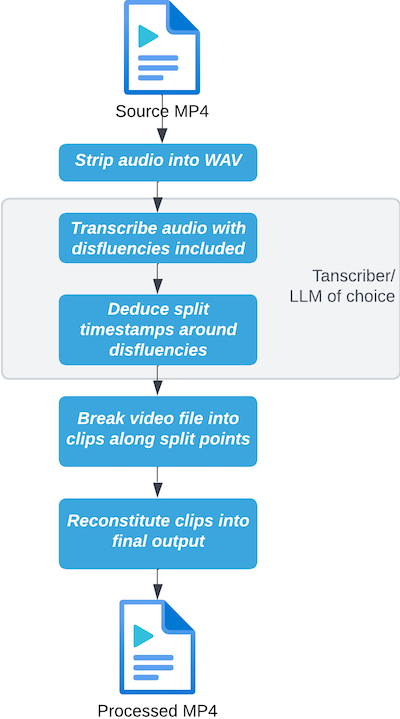

Today’s best transcription models, LLMs, and text-to-speech engines are very good. But it’s tricky to put these pieces together so that they operate at human conversational latency. The technical drivers that are most important, when optimizing for fast voice-to-voice response times are:

- Network architecture

- AI model performance

- Voice processing logic

Today’s state-of-the-art components for the fastest possible time to first byte are:

- WebRTC for sending audio from the user’s device to the cloud

- Deepgram’s fast transcription (speech-to-text) models

- Llama 3 70B or 8B

- Deepgram’s Aura voice (text-to-speech) model

In our original demo, we self-host all three AI models – transcription, LLM, and voice generation – together in the same Cerebrium container. Self-hosting allows us to do several things to reduce latency.

- Tune the LLM for latency (rather than throughput).

- Avoid the overhead of making network calls out to any external services.

- Precisely configure the timings we use for things like voice activity detection and phrase end-pointing.

- Pipe data between the models efficiently.

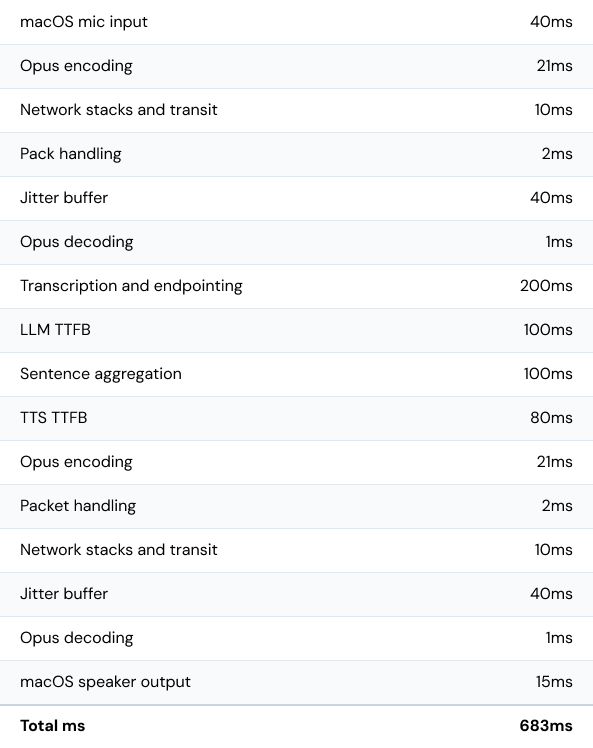

We are targeting an 800ms median voice-to-voice response time. This architecture hits that target and in fact can achieve voice-to-voice response times as low as 500ms.

Optimizing for low latency: models, networking, and GPUs

The very low latencies we are targeting here are only possible because we are:

- Using AI models chosen and tuned for low latency, running on fast hardware in the cloud.

- Sending audio over a latency-optimized WebRTC network.

- Colocating components in our cloud infrastructure so that we make as few external network requests as possible.

AI models and latency

All of today’s leading LLMs, transcription models, and voice models generate output faster than humans speak (throughput or tokens per second). So we don’t usually have to worry much about our models having fast enough throughput.

On the other hand, most AI models today have fairly high latency relative to our target voice-to-voice response time of 500ms. When we are evaluating whether a model is fast enough to use for a voice AI use case, the kind of fast we’re measuring and optimizing is the latency kind.

We are using Deepgram for both transcription and voice generation, because in both those categories Deepgram offers the lowest-latency models available today. Additionally, Deepgram’s models support “on premises” operation, meaning that we can run them on hardware we configure and manage. This gives us even more leverage to drive down latency. (More about running models on hardware we manage, below.)

Deepgram’s Nova-2 transcription model can deliver transcript fragments to us as quickly as 100ms. Deepgram’s Aura voice model running in our Cerebrium infrastructure has a time to first byte as low as 80ms. These latency numbers are very good! The state of the art in both transcription and voice generation are rapidly evolving, though. We expect lots of new features, new commercial competitors, and new open source models to ship in 2024 and 2025.

Llama 3 70B is among the most capable LLMs available today. We’re running Llama 3 70B on NVIDIA H100 hardware, using the vLLM inference engine. This configuration can deliver a median time to first token (TTFT) latency of 80ms. The fastest hosted Llama 3 70B services have latencies approximately double that number. (Mostly because there is significant overhead in making a network request to a hosted service.) Typical TTFT latencies from larger-parameter SOTA LLMs are 300-400ms.

WebRTC networking for voice AI

WebRTC is the fastest, most reliable way to send audio and video over the Internet. WebRTC connections prioritize low latency and the ability to adapt quickly to changing network conditions (for example, packet loss spikes). For more information about the WebRTC protocol and how WebRTC and WebSockets complement each other, read this short explainer.

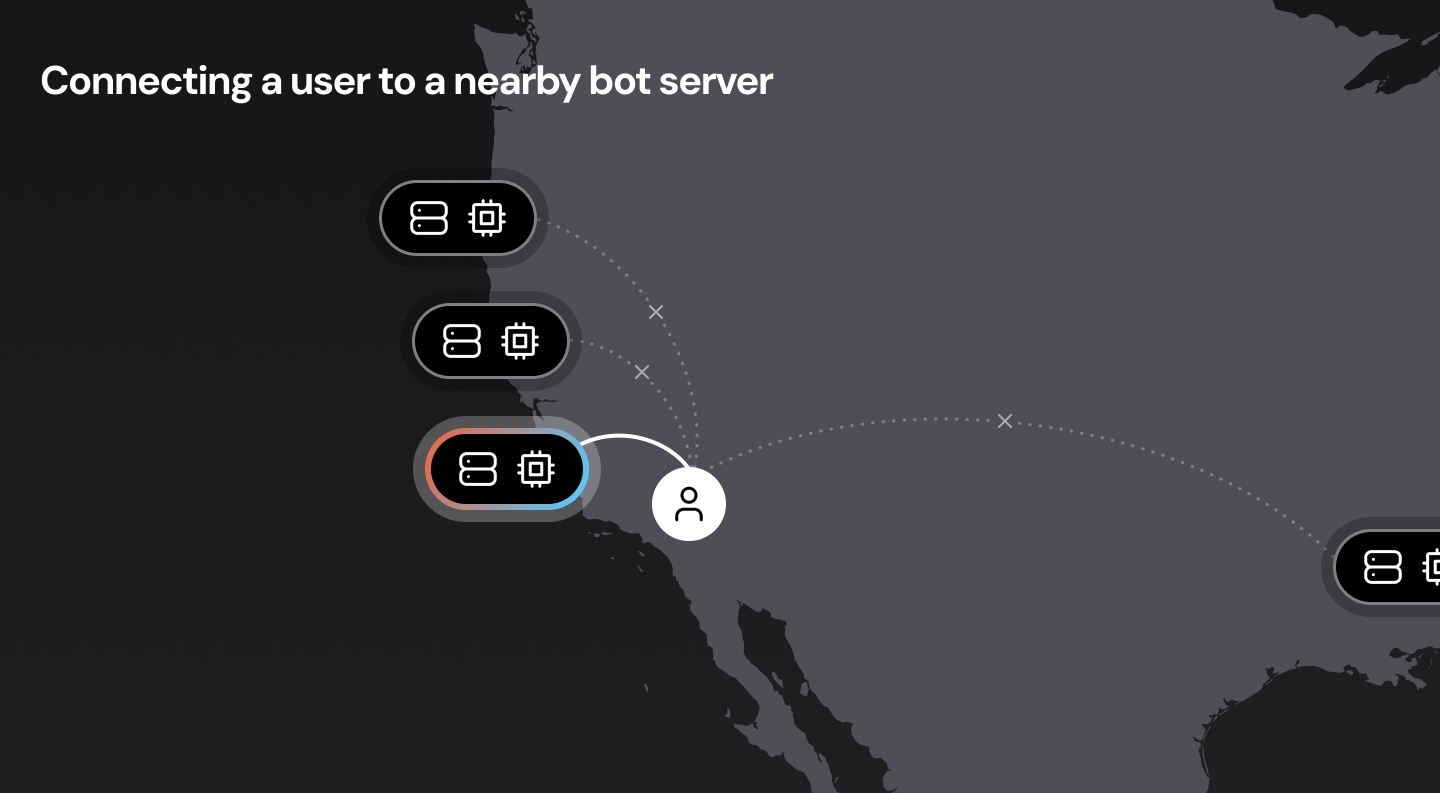

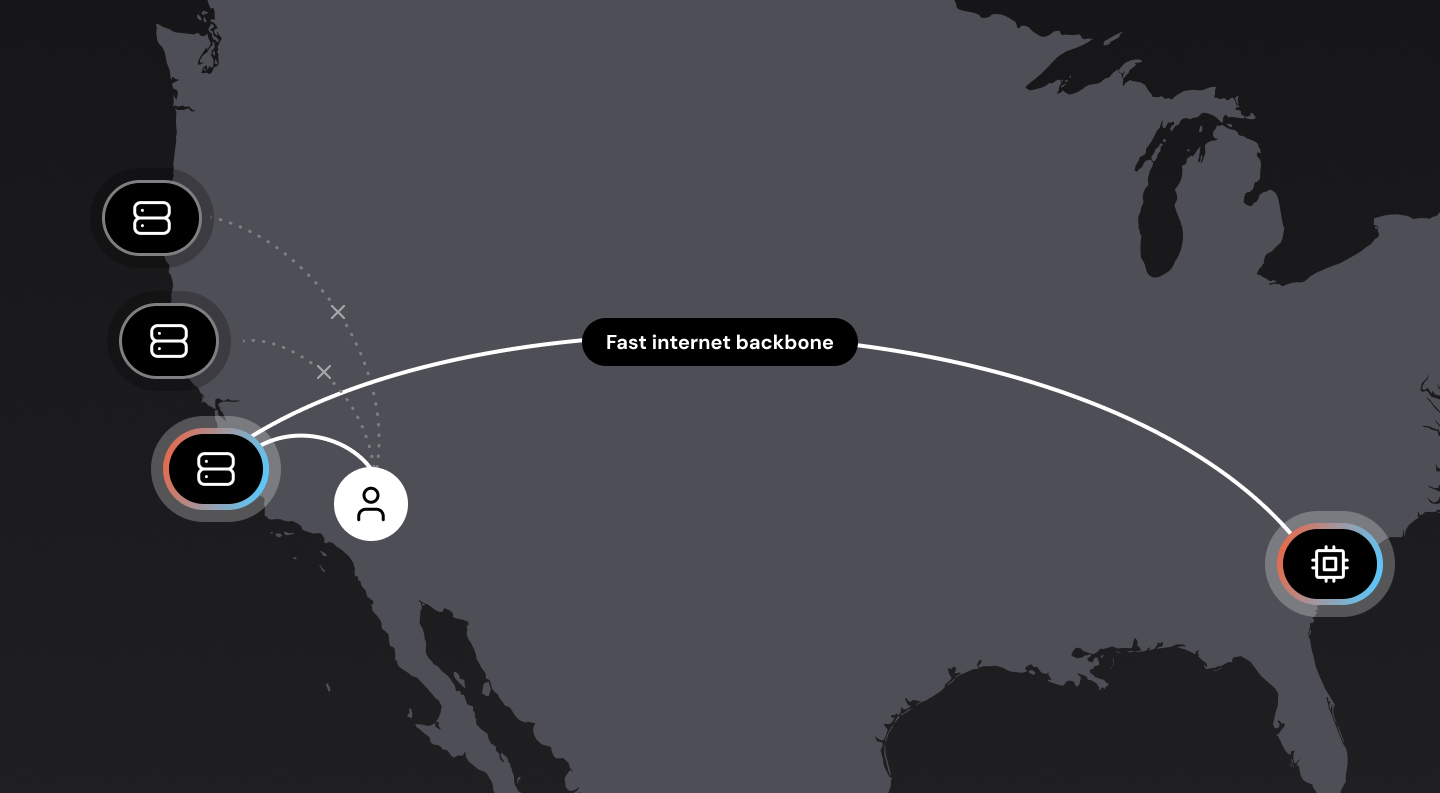

Connecting users to nearby servers is also important. Sending a data packet round-trip between San Francisco and New York takes about 70ms. Sending that same packet from San Francisco to, say, San Jose takes less than 10ms.

In a perfect world, we would have voice bots running everywhere, close to all users. This may not be possible, though, for a variety of reasons. The next best option is to design our network infrastructure so that the “first hop” from the user to the WebRTC cloud is as short as possible. (Routing data packets over long-haul Internet connections is significantly slower and more variable than routing data packets internally over private cloud backbone connections.) This is called edge or mesh networking, and is important for delivering reliable audio at low latency to real-world users. If you’re interested in this topic, here’s a deep dive into WebRTC mesh networking.

Where the components run – self-hosting the LLM and voice models

The code for an AI voice bot is usually not terribly large or complicated. The bot code manages the orchestration of transcription, LLM context-management and inference, and text-to-speech voice generation. (In many applications, the bot code will also read and write data from external systems.)

But, while voice bot logic is often simple enough to run locally on a user’s mobile device or in a web browser process, it almost always makes sense to run voice bot code in the cloud.

- High-quality, low-latency transcription requires cloud computing horsepower.

- Making multiple requests to AI services – transcription, LLM, text-to-speech – is faster and more reliable from a server in the cloud than from a user’s local machine.

- If you are using external AI services you need to proxy them or access them only from the cloud to avoid baking API keys into client applications.

- Bots may need to perform long-running processes, or may need to be accessible via telephone as well as browser/app.

Once you are running your bot code in the cloud, the next step in reducing latency is to make as few requests out to external AI services as possible. We can do this by running the transcription, LLM, and text-to-speech (TTS) models ourselves, on the same computing infrastructure where we run the voice bot code.

Colocating voice bot code, the LLM, and TTS in the same infrastructure saves us 50-200ms of latency from network requests to external AI services. Managing the LLM and TTS models ourselves also allows us to tune and configure them to squeeze out even more latency gains.

The downside of managing our own AI infrastructure is additional cost and complexity. AI models require GPU compute. Managing GPUs is a specific devops skill set, and cloud GPU availability is more constrained than general compute (CPU) availability.

Voice AI latency summary – adding up all the milliseconds

So, if we’re aiming for 800ms median voice-to-voice latency (or better) what are the line items in our latency “budget?”

Here’s a list of the processing steps in the voice-response loop. These are the operations that have to be performed each time a human talks and a voice bot responds. The numbers in this table are typical metrics from our reasonably well optimized demo running on NVIDIA containers hosted by Cerebrium.

Next steps

For some voice agent applications, the cost and complexity of managing AI infrastructure won’t be worth taking on. It’s relatively easy today to achieve voice-to-voice latency in the neighborhood of two to four seconds using hosted AI services. If latency is not a priority, there are many LLMs that can be accessed via APIs and have time to first token metrics of 500-1500ms. Similarly, there are several good options for transcription and voice generation that are not as fast as Deepgram, but deliver very high quality text-to-speech and speech-to-text.

However, if fast, conversational voice responsiveness is a primary goal, the best way to achieve that with today’s technology is to optimize and colocate the major voice AI components together.

If this is interesting to you, definitely try out the demo, read the demo source code (and experiment with the Pipecat open source voice AI framework), and learn more about Cerebrium's fast and scalable AI infrastructure.

]]>Unlock enhanced video quality and performance with Daily Adaptive Bitrate, combining ultra-reliable calls and the best visual experience your network can offer—automatically adjusting in real-time to suit fluctuating network conditions.

Since its launch, Daily has been at the forefront of providing the most advanced simulcast APIs of any WebRTC provider. Developers who choose Daily benefit from complete flexibility and control, enabling them to optimize the performance of their video applications across various devices and network conditions. This level of customization allows developers to fine-tune the user experience to match their specific goals.

Simulcast is an invaluable feature for video applications, enabling developers to balance between quality and reliability. It can, however, be challenging to implement when seeking the perfect middle ground for all connected peers in real-world, changing network conditions. Adjusting simulcast settings in real-time to maintain performance requires on-going peer network monitoring, client-side logic, and can be tricky to debug.

Imagine a scenario where developers no longer need to worry about optimizing for quality, and can instead have full confidence that video will automatically look its best within the available bandwidth. This would greatly simplify development, allowing engineers to focus on other aspects of their application.

Daily Adaptive Bitrate (ABR)

Daily Adaptive Bitrate is an industry-first innovation that automatically adjusts the quality of video to ensure maximum performance without compromising reliability.

When network is constrained, the bitrate and resolution will be dropped to ensure that the call remains connected (ensuring enough throughput for audio.) When there’s bandwidth headroom, the bitrate and resolution will be increased to deliver higher quality video.

It doesn’t require any pre-configured video settings, or client side network monitoring and adjustment.

- In 1:1 calls, only a single dynamic layer of video is sent, saving bandwidth and allowing for higher overall video quality.

- In multi-party calls, the top layer is always adaptive based on network conditions and lower layers are used for smaller UI elements (such as sidebars or large grids) or as a fallback for poor network conditions.

Let’s take a look at an example...

Here are some typical simulcast settings that are somewhat conservative to optimize for reliability:

- High layer:

{ maxBitrate: 700 kbps, targetResolution: 640x360, maxFramerate: 30 fps } - Medium layer:

{ maxBitrate: 200 kbps, targetResolution: 427x240, maxFramerate: 15 fps } - Low layer:

{ maxBitrate: 100 kbps, targetResolution: 320x180, maxFramerate: 15 fps }

A user joins a 1:1 call from a WiFi network with speeds of 5 Mbps down, 1 Mbps up. Upon joining the call, due to the network’s slow upload speed, the user is unable to send all three layers. The available options are to either: a) drop the framerate, or b) drop the highest layer.

As a result, this user will:

- ⬇️ Send - 360p video @ 15 fps, or 240p video @ 15 fps

- ⬆️ Receive - 360p video @ 30 fps

With Daily Adaptive Bitrate enabled, Daily will automatically optimize the experience based on available bandwidth. Given the 5 Mbps down / 1 Mbps up network, the user will:

- ⬇️ Send - 540p video @ 30 fps (around 800 kbps)

- ⬆️ Receive - 720p video @ 30 fps (around 2 Mbps)

Compared to a hardcoded simulcast configuration, this is a dramatic increase in call quality for the user that doesn't sacrifice call reliability.

Getting started

Daily Adaptive Bitrate has been rigorously tested at scale for some time now. It is enabled by default for all 1:1 calls, or can be manually configured by following these steps:

- Set the

enable_adaptive_simulcastproperty totruefor either your domain (e.g. all calls) or room (e.g. specific calls). - If you’re a Prebuilt user, no additional configuration is needed.

- If you’ve built a custom app with daily-js, please update to version

0.60.0or0.61.0for daily-react-native. - The only code change required is to set the

allowAdaptiveLayerswithin thesendSettingsproperty totrueat join time:

const call = Daily.createCallObject();

call.join({

sendSettings: {

video: {

allowAdaptiveLayers: true

}

}

});Please note that Daily Adaptive Bitrate currently works best on Chrome and Safari (both desktop and mobile). Firefox support will ship mid-year, although users on Firefox can still join the call unimpeded sending video using 3-layer simulcast.

Multi-party (>2 participants) are currently in beta – please contact us if you'd like to take part in testing.

For more information regarding Daily Adaptive Bitrate, please refer to our documentation here. We’re excited to see how this feature improves both the developer and end-user experience on the Daily platform. As always, for any questions or feedback, feel free to reach out.

]]>I’ve talked before on this blog about the surprising complexities of recording WebRTC calls. Today I’d like to discuss the case of web page recording more widely.

The solutions explained here also have relevance to web application UI architecture in general. I’m going to show you some ideas for layered designs that enable content-specific acceleration paths. This is something web developers typically don’t need to think about very often, but it can be crucial to unlocking high performance. Examining the case of web page recording can help to understand why.

Daily’s video rendering engine VCS is designed for this kind of layered architecture, so you can apply these ideas directly on Daily’s platform. At the end of this post, I’ll talk more about VCS specifically and how web page recording fits into the picture.

Three types of web capture

First we should define more precisely what’s meant by “recording”. We’re talking about using a web browser application running on a server in a so-called headless configuration (i.e., no display or input devices are connected).

With such a setup, there are several ways to capture content from a web page:

- Extracting text and images, and perhaps downloading embedded files like video. This is usually called scraping. The idea is to capture only the content that is of particular interest to you, rather than a full snapshot of a web application’s execution. Crawling, as done by search engines, is an adjacent technique (traditionally crawlers didn’t execute JavaScript or produce an actual browser DOM tree, but today a lot of content is in web applications that can’t be parsed without).

- Taking screenshots of the browser at regular intervals or after specific events. This is usually in the context of UI testing, and more generally, falls under the umbrella of browser automation. You might use the produced screenshots to compare them with an expected state (i.e., a test fails if the browser’s output doesn’t match). Or the screenshots could be consumed by a “robot” that recognizes text content, infers where UI elements are located, and triggers events to use the application remotely.

- Capturing a full A/V stream of everything the browser application outputs, both video and audio, and encoding it into a video file. This is effectively a remote screen capture on a server computer that doesn’t have a display connected.

In this post, I’ll focus on the last kind of headless web page recording: capturing the full A/V stream from the browser. Because we’re capturing the full state of the browser’s A/V output, it is much more performance-intensive than the more commonplace scraping or browser automation.

Those use cases can get away with capturing images only when needed. But for the A/V stream, the browser’s rendering needs to be captured at a stable 30 frames per second. Everything that can happen within the web page needs to be included: CSS animations and effects, WebRTC, WebGL, WebGPU, WebAudio, and so on. With the more common browser automation scenario, you have the luxury of disabling browser features that don’t affect the visual state snapshots you care about. But this is not an option for remote screen capture.

So why would you even want this? Clearly it’s more of a niche case compared to scraping and browser automation, which are massively deployed and well understood. The typical scenario for full A/V capture is that you have a web application with rich content like video and dynamic graphics, and you want to make a recording of everything that happens in the app without requiring a user to activate screen capture themselves. When you’ve already developed the web UI, it seems like the easy solution would be to just capture a screen remotely. Surely that’s a solved problem because the browser is so ubiquitous…?

Unfortunately it’s not quite that simple. But before we look at the details, let’s do a small dissection of an example app.

What to record in a web app

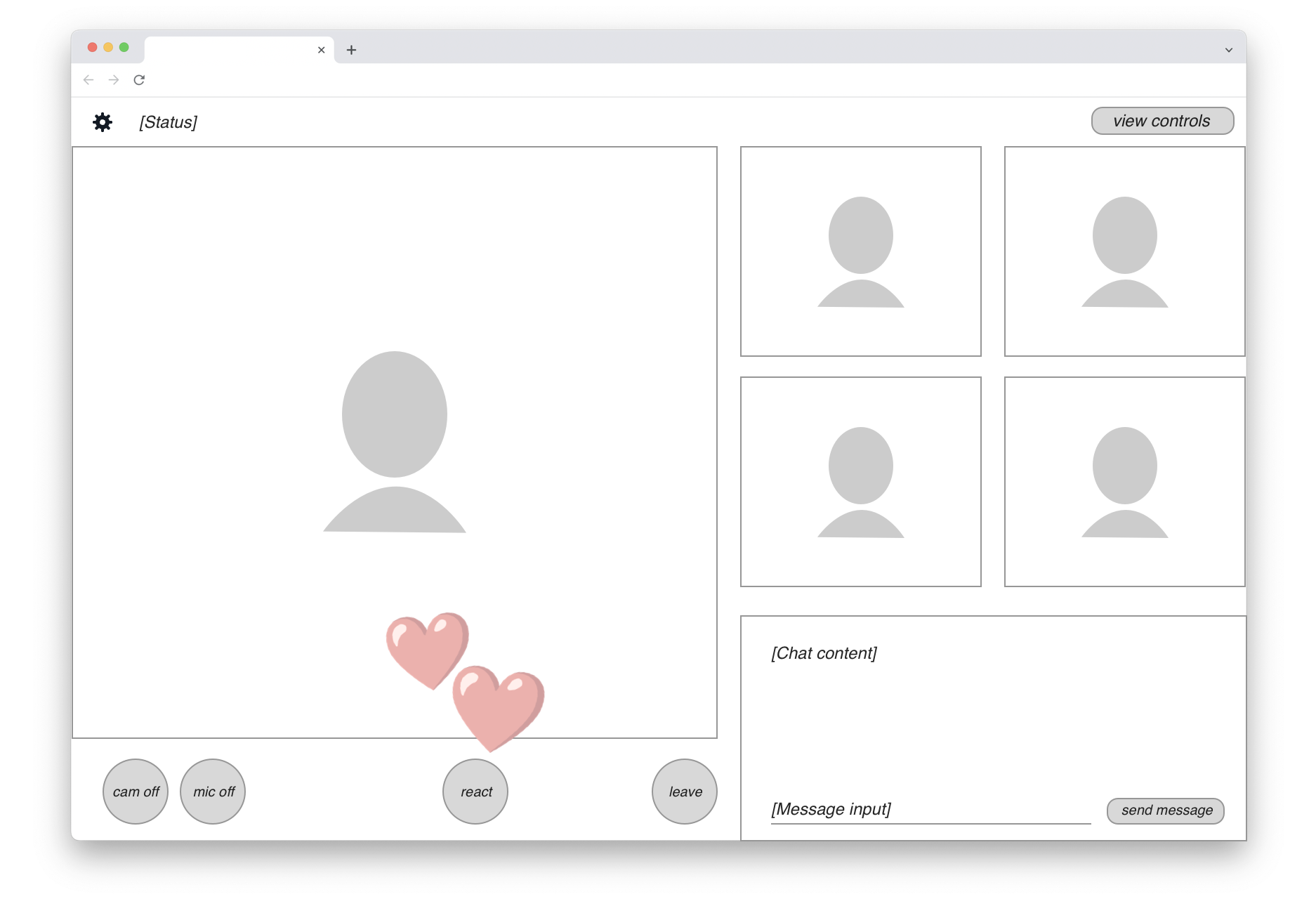

The following UI wireframe shows a hypothetical web-based video meeting app, presumably implemented on WebRTC:

There are up to five participant video streams displayed. In the bottom-right corner, a live chat view is available to all users. In the bottom-left row, we find standard video call controls and a “React” button that lets users send emojis. When that happens, an animated graphic is rendered on top of the video feed (shown here by the two floating hearts).

Recording a meeting like this means you probably want a neutral viewpoint. In other words, the content shown in the final recording should be that of a passive participant who doesn’t have any of the UI controls.

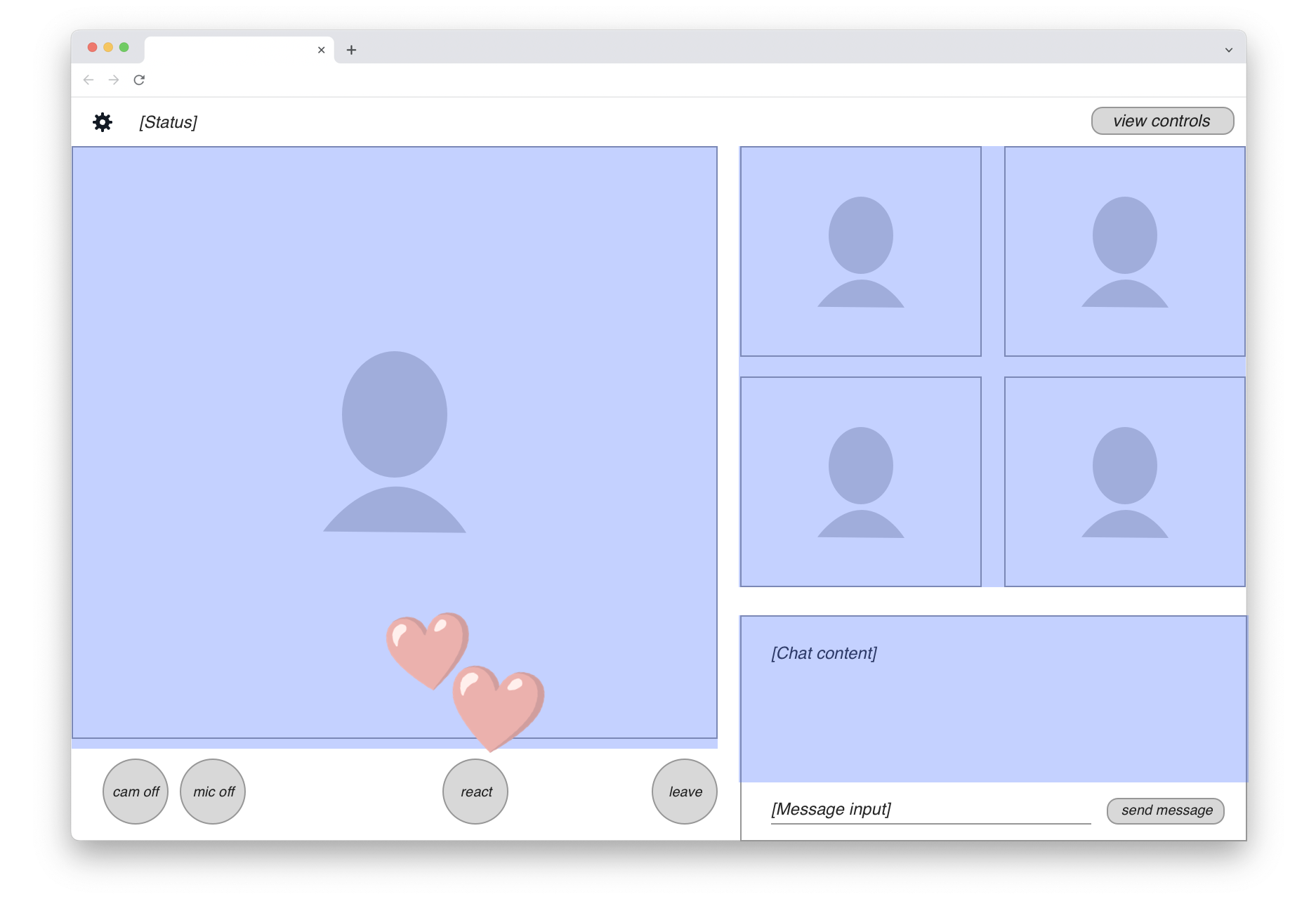

The content actually needed for the headless recording is marked with a blue highlight in this drawing:

We can see that the majority of UI elements on the page should actually be excluded from the recording. So in fact, the “web page recording” we seek isn’t quite as straightforward as just running a screen capture. We’ll clearly need to do some front-end engineering work to create a customized view of the application for the recording target.

Assuming this development work is done, where can we then run these remote screen capture jobs? Here is the real rub.

Competing for the hottest commodity in tech

The web browser offers a very rich palette of visual capabilities to application developers. CSS includes animations, transitions, 3D layer transformations, blending modes, effects like Gaussian blur, and more — all of which can be applied together. On top of that we’ve got high-performance rendering APIs like Canvas, WebGL and today also WebGPU. If you want to capture real web apps at 30 fps, you can’t easily pick a narrow subset of the capabilities to record. It’s all or nothing.

Intuitively the browser feels like a commodity application because it runs well on commodity clients like cheap smartphones, low-end laptops, and other devices. But this is achieved by extensive optimization for the modern client platform. The browser relies on client device GPUs for all of its visual power. A mid-range smartphone that can run Chromium with the expected CSS bells and whistles has an ARM CPU and an integrated GPU, both fully available to the browser application.

Commodity servers are a very different hardware proposition. An ordinary server has a fairly high-end Intel/AMD CPU, but it’s typically virtualized and shared by many isolated programs running on the same hardware. Crucially, there is no GPU on this commodity server. This means that all of Chromium’s client-oriented rendering optimizations are unavailable.

It’s possible to get a server with a GPU, but these computers are nothing like the simple smartphone or laptop for which Chromium is optimized. GPU servers are designed for the massive number crunching required by machine learning and AI applications. These special GPUs can cost tens of thousands of dollars and they include large amounts of expensive VRAM. All this special hardware goes largely unused if you use such a GPU to render CSS effects and some video layers that a Chromebook could handle.

At the moment of this writing, the situation is even worse because these GPU servers happen to be the hottest commodity in the entire tech industry. Everybody wants to do AI. The demand is so massive that Nvidia, the main provider of these chips, has taken an active role in picking which customers actually get access. This was reported by The Information:

Nvidia plays favorites with its newest, much-sought-after chips for artificial intelligence, steering them to small cloud providers who compete with the likes of Amazon Web Services and Google. Now Nvidia is also asking some of those small cloud providers for the names of their customers—and getting them—according to two people with direct knowledge.

It's reasonable that Nvidia would want to know who’s using its chips. But the unusual move also could allow it to play favorites among AI startups to further its own business. It’s the latest sign that the chipmaker is asserting its dominance as the major supplier of graphics processing units, which are crucial for AI but in short supply.

In this situation, using server GPUs for web page recording would be like taking a private jet to go to work every morning. It’s technically possible, but you’d need an awfully good reason and some deep pockets.

There are ways to increase efficiency by packing multiple browser capture jobs on one GPU server. But you’d still be wasting most of the expensive hardware’s capabilities. Nvidia’s AI/ML GPUs are designed for high-VRAM computing jobs, not the browser’s GUI-oriented graphics tasks where memory access is relatively minimal.

Let’s think back to the private jet analogy. If you have a jet engine but your commute is only five city blocks, it doesn’t really help at all if you ask all your neighbors to join you on the plane trip — it’s still the wrong vehicle to get you to work. Similarly, with the server GPUs, there’s a fundamental mismatch between your needs and the hardware spec.

Why generic hardware needs specialized software

Is there a way we could render the web browser’s output on those commodity CPU-only servers instead? The problem here lies in the generic nature of the browser platform combined with the implicit assumptions of the commodity client hardware.

I noted above that capturing a web app ends up being “all or nothing” — a narrow subset of CSS is as good as useless. A browser automation system has more freedom here. It can execute on the CPU because it has great latitude for trade-offs across several dimensions of time and performance:

- When to take its screenshots

- How much rendering latency it tolerates

- Which expensive browser features to disable

In other words, browser automation can afford wait states and it can skip animations, but remote screen capture can’t. It must be real-time.

Fundamentally we have here a performance sinkhole created from combining two excessively generic systems. Server CPUs are a generic computing solution, not optimized for any particular application. The web browser is the most generic application platform available. Multiplying compromise by another compromise is like multiplying small fractions — the product is less than the individual components. Without any specialized acceleration on either the hardware or software side, we’re left in a situation where 30 frames per second on arbitrary user content remains an elusive dream.

Maybe we just use more CPU cores in the cloud? That’s a common solution, but it quickly becomes expensive, and it’s still susceptible to web content changes that kill performance.

For example, you can turn any DOM element within a web page into a 3D plane by adding perspective and rotateX CSS transform functions to it. Now your rendering pipeline suddenly has to figure out questions like texture sampling and edge antialiasing for this one layer. Even with many CPU cores, this will be a massive performance hit. And if you try to prevent web developers from using this feature, there’s always the next one. It becomes an endless whack-a-mole of CSS properties which will frustrate developers using your platform as the list of restrictions grows with everything they try.

Given that we can’t provide acceleration on the hardware side (those elusive server GPUs…), the other option left is to accelerate the software.

Designing for layered acceleration

At this point, let’s take another look at the hypothetical web app whose output we wanted to capture up above.

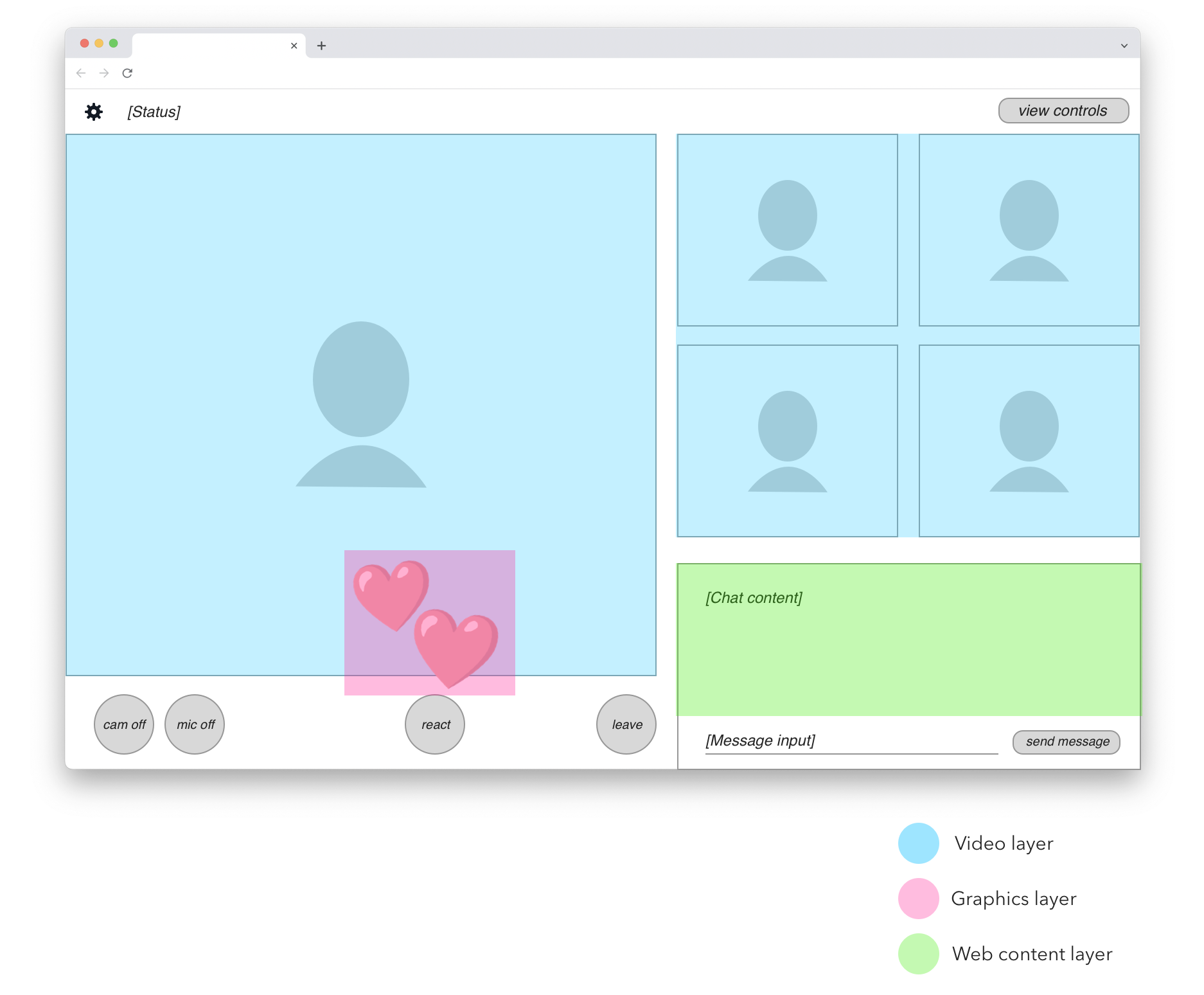

Within the UI area to be recorded, we can identify three different types of content. They are shown here in blue, pink, and green highlights:

In blue we have participant videos. These are real-time video feeds decoded from data received over WebRTC.

In pink we have animated overlay graphics. In this simplified example, it’s only the emoji heart reactions. In a real application we would probably identify other graphical elements, such as labels or icons that are rendered on top of participant videos.

In green we have the shared chat view. This is a good example of web content that doesn’t require full 30 fps screen capture to be rendered satisfactorily. The chat view only updates every second at most, is not real-time latency sensitive, and doesn’t depend on CSS animations, video playback, or fancy WebGL. We can render this content on a much more limited browser engine than what’s required for complete web page recording.

Identifying these layers is key to unlocking the software-side acceleration I mentioned earlier. If we could split each of these three content types onto separate rendering paths and put them together at the last moment, we could optimize the three paths separately for reasonable performance on commodity servers.

The accelerated engine

Could we do this by modifying the web browser itself? Forking Chromium would be a massive development effort and we’d be scrambling to keep up with updates. But more fundamentally, technologies like CSS are simply too good at enabling developers to make tiny code changes that will completely break any acceleration path we can devise.

At Daily, we provide a solution in the form of VCS, the Video Component System. It’s a “front-end adjacent” platform that adopts techniques of modern web development, but is explicitly designed for this kind of layered acceleration.

With VCS, you can create React-based applications using a set of built-in components that always fall on the right acceleration path. For example, video layers in VCS are guaranteed to be composited together in their original video-specific color space, unlocking higher quality and guaranteed performance. There's no way a developer can accidentally introduce an unwanted color space conversion.

For content like the green-highlighted chat box in the previous illustration, VCS includes a WebFrame component that lets you embed arbitrary web pages inside the composition, very much like an HTML <iframe>. It can be scaled individually and remote-controlled with keyboard events. This way you can reuse dynamic parts of your existing web app within a VCS composition without wrecking the acceleration benefits for video and graphics.

VCS is available on Daily’s cloud for server-side rendering of recordings and live streams. With the acceleration-centric design, we can offer VCS as an integral part of our infrastructure, available for any and all recordings. It’s not a separately siloed service with complex pricing. That means you always save money, and we can guarantee that it will scale even if your needs grow quickly.

This post has more technical detail on how you might implement a layered web page recording on VCS. Look under the heading “Live WebFrame backgrounds”.

One more thing to consider… The benefits of layered acceleration can be more widely useful than just for servers. If you can structure your application UI this way, why not run the same code on clients too? For that purpose, we offer a VCS web renderer that can be embedded into any web app regardless of what framework you’re using. This lets you isolate performance-intensive video rendering into a managed “acceleration box” and focus on building the truly client-specific UI parts that are not shared with server-side rendering.

For a practical example of how to use the VCS web renderer, see the Daily Studio sample application. It’s a complete solution for Interactive Live Streaming where the same content can be rendered on clients or servers as needed.

Summary

In this post, we discussed the challenges and solutions to web page recording. Hardware acceleration is an expensive commodity, so Daily provides an alternative, more cost-effective solution in the form of our Video Component System which can include layered web content.

If you have any questions about VCS, don't hesitate to reach out to our support team or head over to our WebRTC community.

]]>Twilio recently announced that the Twilio Video WebRTC service will be turned off in December 2024.

On January 22 we hosted a live webinar for Twilio Video customers who are beginning the process of porting their products over to other video platforms.

Daily is a seamless migration option for Twilio Video customers. We provide all of the features of Twilio Video plus many more, have a long history of operating our industry’s most innovative WebRTC developer platform, and offer dedicated engineering resources to customers porting from Twilio. For more information about Daily, please visit our Twilio Migration Resources hub.

Below, we’ve embedded a video of the webinar, including the Q&A section. Underneath the video is a transcript.

Introduction to Twilio Video Migration webinar

Hi. Welcome to this conversation about porting telehealth applications from Twilio Video to other video platforms.

If you're here, it's likely you were impacted by the announcement in December that Twilio is leaving the video developer tools space.

Twilio is giving customers a year to transition.

We know how disruptive it is to have to change platforms. My hope is that our notes today will make things a little bit easier. At Daily, we've seen what approaches work well when porting between video platforms, and what approaches create risks.

Today, we'll:

- cover high-level best practices

- talk through the major choices you'll need to make, and

- try to give you a sense of timelines and resource requirements

If your engineering team knows your product codebase well and understands your Twilio Video implementation, you will be able to transition to a new platform without too much difficulty. If you need engineering support, the new platform vendor you are moving to should be able to help you, and there are excellent independent dev shops that specialize in video implementation.

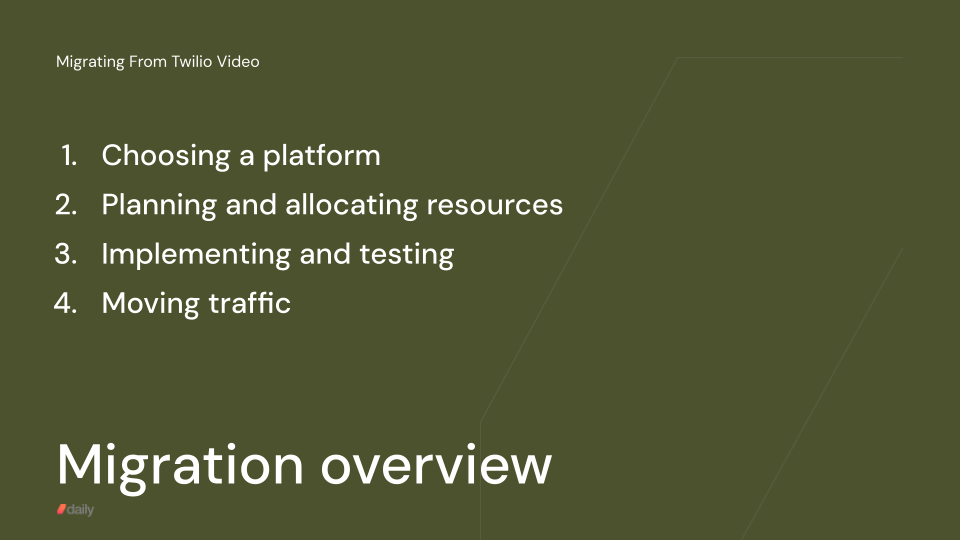

So, let's talk about the big tasks involved in porting your code. Here's how we break down a project:

Major components of a porting project

- choosing a new platform

- planning and allocating resources

- writing the code and testing it

- moving traffic

Choosing a platform

Build or buy

Twilio Video is a fully managed service. What our industry calls a PaaS, or Platform-as-a-Service.

When you migrate off of Twilio Video, you could migrate to another managed platform, or you could stand up, and manage, your own video infrastructure.

This is a classic software engineering build vs buy decision. I'm not going to spend too much time on this today, because the majority of the Twilio Video customers that we've talked to plan to stay in the managed platform world.

But we do often get asked about build vs buy in our space, so I'll cover this briefly, and at a high live. I really like talking about video tech, so if you want to dive deeper on this topic, come find me on Twitter or LinkedIn.

Here are the three most important things to consider when thinking through a build vs buy decision. We've found this is sometimes new information for engineering teams thinking about standing up their own video infrastructure.

First, managed services operate at significant economies of scale, so it's very hard to save money compared to paying someone else to run your video infrastructure. The rough rule of thumb is that you'll need to be paying a video platform about $3m/year before you can approach the break-even cost of paying for your own infrastructure directly.

Second, there are no off-the-shelf devops, autoscaling, observability, or multi-region components available for real-time video infrastructure. There are good open source WebRTC media servers, but they are building blocks for operating in production at scale, not complete solutions for operating in production at scale. It's easy to deploy an open source WebRTC server for testing. But for production, you'll need to build out quite a lot of custom devops tooling.

Third, real-time video infrastructure is a specific kind of high throughput content delivery network. Putting media servers in every geographic region where you have users turns out to be critical to making sure video calls work well on every kind of real-world network connection. You really want servers close to the edge of the network in the terms that our industry uses.

All of the major video platforms — Vonage, Chime, Zoom, Agora, Daily — have servers in two dozen or more data centers. And, today, the platforms that benchmark the best for video quality — Zoom, Agora, and Daily — have built out sophisticated "mesh network" infrastructure that routes video and audio packets from the edge of the network across fast internal backbones.

For all these reasons, I think a managed service is the right choice in almost all cases. I'm biased, of course, because Daily is a managed service. But I've walked through the numbers and the technical requirements here with dozens of customers of different shapes and sizes.

The big five: key factors in platform evaluation

So let's assume today that you are migrating from Twilio Video to another managed platform.

How do you choose which one?

Our recommended approach is to divide your platform evaluation into five topics.

- Reliability

- Quality

- Features and requirements

- Compliance

- Support

Reliability

There are two aspects of reliability: first, overall service uptime. Second, whether video sessions reliably connect and remain connected for any user, anywhere in the world, on any network.

The importance of uptime is obvious.

I won't say much more about this, other than that every vendor should have a status page and good due diligence here is to look back through all listed production incidents on the status page to get a sense of how your vendor approaches running infrastructure at scale.

Every large-scale production system has incidents. The goal for those of us who run these systems is to minimize both overall issues and the impact of any one issue. Everything should be -— as much as possible — redundant, heavily monitored, and over-provisioned.

In general, I think that system reliability is not a distinguishing factor for the major providers in our space. Those of us who have been running real-time video systems at scale for 6, 8, 10 years all have a track record of reliability and uptime.

If you're considering a newer vendor, though, it's definitely worth doing extra diligence about uptime.

The other aspect of reliability is a distinguishing factor between vendors. This is the connection success rate – will your users video sessions connect and stay connected, on any given day, for all of your users?

The big things to ask about here are:

- Does a vendor have infrastructure in every region where you have users? If not, many of those users will have to route via long-haul, public internet routes, and some of those calls will fail.

- Does a vendor heavily test and benchmark on a wide variety of real-world networks?

- Does a vendor heavily test and optimize on a wide variety of real-world devices, including older devices and mobile Safari? Device support is, surprisingly, not something that every video vendor prioritizes.

Quality

That last set of questions is a good transition to talking about video and audio quality.

Video and audio quality are about adapting to a wide range of real-world network conditions.

Delivering the best possible video and audio quality to every user, on every device, everywhere in the world is the second most important job your video vendor should be doing, second only to uptime.

Video and audio quality are critical because bad video experiences lead directly to user churn.

It turns out that platforms have made different amounts of investment in network adaptation, CPU adaptation, and infrastructure over the past five years. Video quality is an area where platform performance does differ.

It's worth understanding what each platform's focus and level of investment in infrastructure and SDK optimizations is. AWS Chime, for example, focuses on the customer support call center use case, and is not very good at adaptive video delivery to the full range of real-world networks and devices. Vonage has not invested in building out infrastructure at the edge of the cloud. Zoom and Agora both focus on native applications and don't fully support web browsers.

Telehealth video and audio quality: real-world conditions

It turns out that video and audio quality for telehealth use cases are challenging in three ways.

Many patients will be on cellular data networks. Many patients will be using mobile devices as opposed to laptop computers. And because installing software introduces significant friction for most users, many telehealth applications are built to run inside web browsers.

A platform vendor should be able to show you benchmarks that tell you how their platform performs on, for example, a poor cellular data or wifi network.

The goal should be to send good quality video whenever possible, and to gracefully degrade the video quality whenever there are network issues, while maintaining audio quality.

Benchmarking video quality

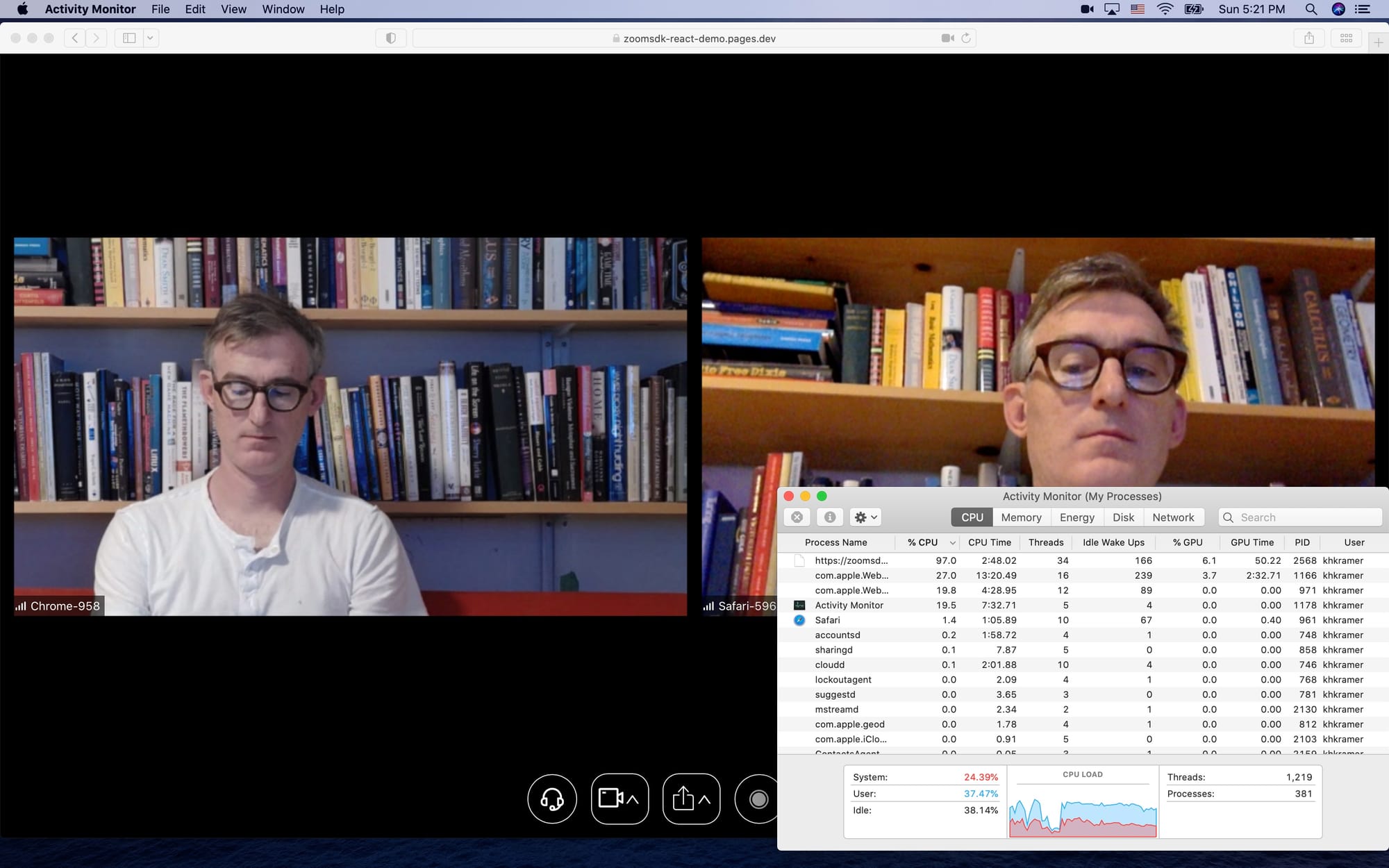

Here's a benchmark that shows video quality adapting to constrained network conditions. We do a lot of benchmarking at Daily. We benchmark our own performance intensively. And we benchmark against our competitors. Our benchmarks are transparent and replicable – we make our benchmarking code available because we think benchmarking is so important.

If you want to do your own independent testing, you can use our benchmarking code. Or you can work with an independent testing partner like testRTC or TestDevLab.

We'll come back to the topic of testing a little bit later.

Now let's talk about a close cousin to testing: observability.

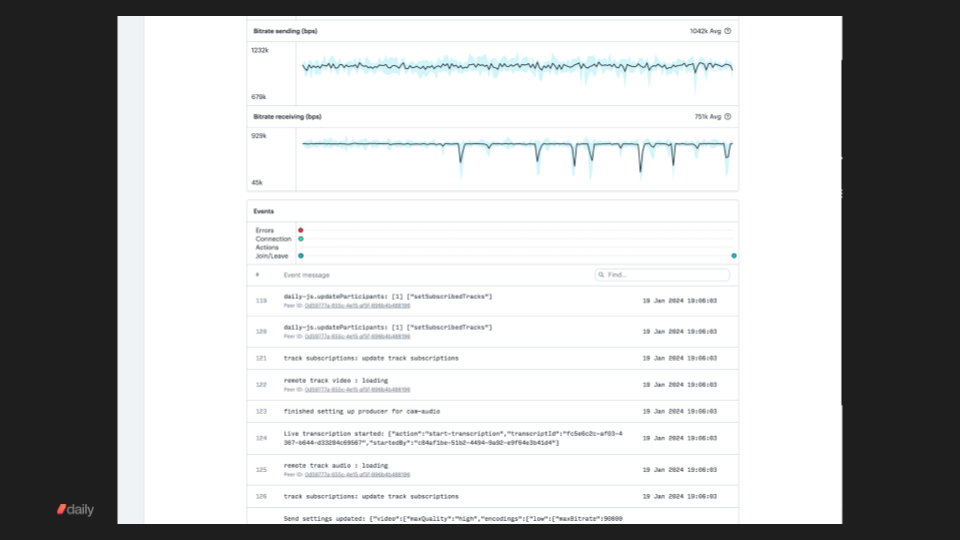

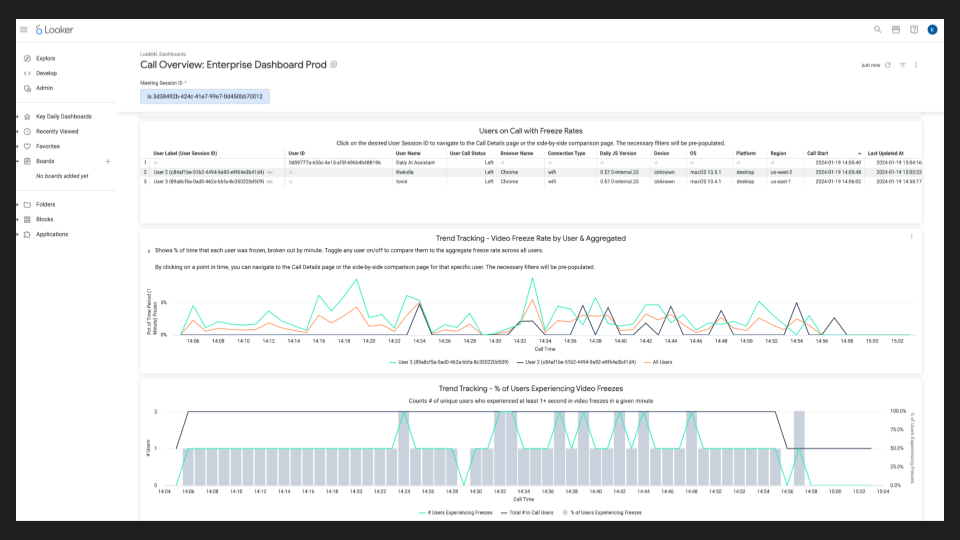

Part of delivering a reliable, best-possible-quality experience to users on any network, any device, anywhere in the world is having actionable metrics and monitoring for every session.

Observability and metrics

Good metrics and monitoring tools are important for two reasons. First, if you're not measuring quality and reliability, it's very hard to make improvements.

Second, you will want to provide customer support to users on an individual basis. So your support team needs tools that allow them to help users debug video issues.

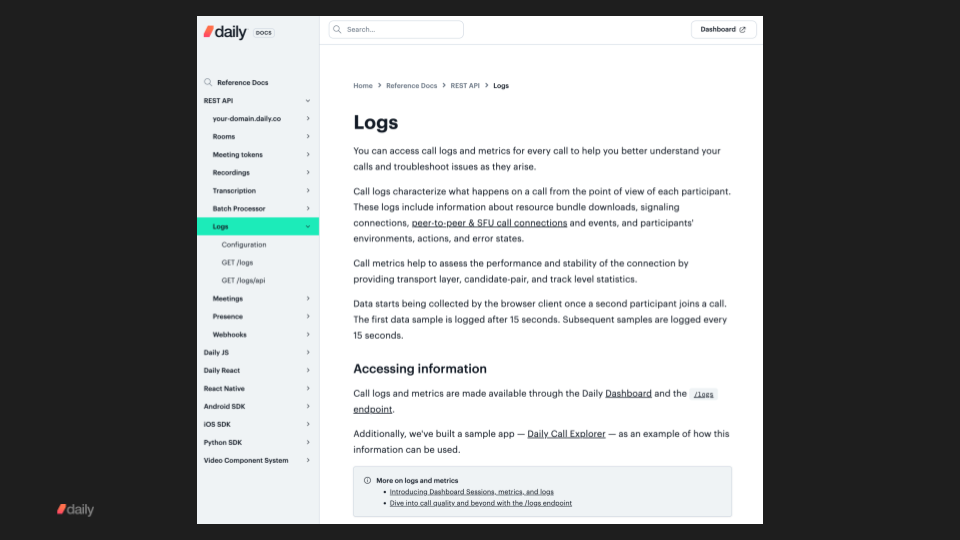

A video platform should give developers and product teams at least three things:

- easy-to-use dashboards

- REST API access to all metrics and SDK action logs

- enterprise tooling integrations

Here's how we check these three boxes at Daily.

Everyone on your team can use our standard dashboard, which gives you point-and-click access to logs and basic metrics from every session.

For customers with larger support and data science teams, our enterprise plans come with access to more complex dashboards built in the industry standard Looker BI tool. These Looker dashboards have more views, more aggregates, and are customizable for your team.

And all of the data that feeds our dashboards is available via our REST APIs.

Features and requirements

Features are important! So let's talk about how to do a feature comparison between vendors.

Every vendor will give you a feature checklist. If you're porting an existing application from Twilio Video, our recommendation is to – at least initially – narrow the focus to what you're using today.

I have to note that this advice cuts against one of our strengths at Daily — we have the biggest feature set of any video platform!

But here's why we recommend focusing first on the features you use today, rather than on your roadmap. We've seen over and over that customers will have an ambitious roadmap and will want to see every feature on that roadmap supported by a platform. Which is totally understandable.

However, roadmaps and requirements change over time. And a good platform, a platform that is under active development, will add new features every quarter.

So I think it makes sense to approach a features comparison exercise in two steps.

First, prioritize evaluating how difficult your port from Twilio Video will be. Are there any features missing that will require writing workarounds or changing your product? Because workarounds and product changes introduce real costs and risks.

Second, look at a vendor's history of adding new features. Talk to that vendor. Tell them your roadmap and try to get a sense of whether your roadmap aligns with the platform's roadmaps and commercial goals.

The reality is that Twilio Video's feature set is not very large. It's unlikely that you will have trouble porting a Twilio Video application to another established platform because of any missing features.

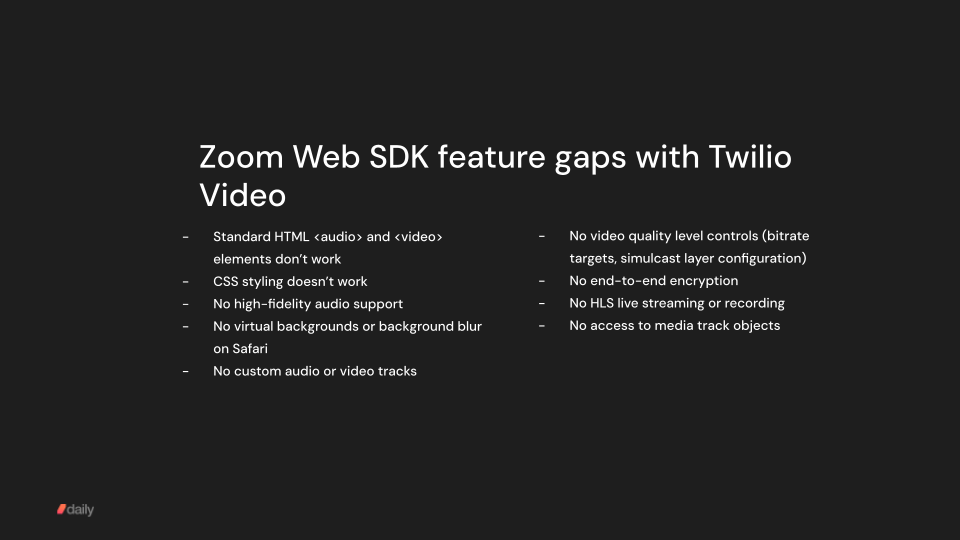

Zoom Web SDK: missing features

Ironically, the only established vendor that this is not true of is Zoom. Twilio recommended Zoom as a new home for Twilio's video customers. But Zoom's developer tools are much less mature than their consumer product, and in particular, Zoom's web browser SDK is missing many features.

Everyone in the video space respects Zoom's core technology and infrastructure. But almost everyone was surprised by the partnership with Zoom. Twilio has a history of being a developer-focused company. In this case, however, Zoom paid Twilio to recommend a solution that is not the right fit for most of Twilio's developer customers.

Compliance

Compliance is just as important as reliability, call quality, and features. But we've left it until next-to-last in the evaluation sequence, simply because in the video space all the established vendors are HIPAA-compliant, operate in ISO 27001 certified data centers, and offer data geo-fencing.

I think there are just two nuances to note here.

First, generally speaking, healthcare applications in the US can't use Agora. Agora is a strong player in the video space, but most enterprise compliance departments won't sign off on Agora handling healthcare video and audio traffic.

Agora's headquarters, executives, and engineering team are physically located in China. This means that the Chinese Government has access to all data that Agora handles, and even though Agora has self-certified as HIPAA compliant, Chinese laws about data privacy are generally considered to be incompatible with HIPAA.

The second nuance is that some applications have a hard requirement for true end-to-end encryption. Today, the only way to do true, auditable end-to-end encryption with WebRTC in a web browser is by using peer-to-peer calls. Peer-to-peer calls come with a number of quality disadvantages and feature limitations. For example, no recording or cloud transcription is possible in peer-to-peer calls.

In general, all established WebRTC vendors take data privacy seriously and use strong encryption for every leg of media transport. So a hard requirement for true end-to-end encryption is pretty unusual. But if your application does have this requirement, you'll need to talk to your vendor to make sure they support peer-to-peer calls.

Engineering support

Finally, support. Good engineering support will, for a lot of customers, make the difference between an easy port and smooth scaling as you grow, versus struggling to ship an application that works well for all users.

At Daily, we find that we can often accelerate implementation, testing, and scaling in three complementary ways.

First, we can often save you time by offering best practices and sample code. We have sample code repositories for many common use cases and features. In an average quarter, we publish a dozen or so tutorials and explainer posts on our blog.

Second, if your use case is anything other than 1:1 video calls, it's worth a quick conversation about how to tune your video settings to maximize quality and reliability for your use case. Daily supports use cases ranging from 1:1 calls, to social apps with 1,000 participants all moving around in a virtual environment, to 100,000-person real-time live streams. The best video settings for these different use cases are all a little bit different.

Third, we can often help you debug your application code, even if your problems aren't directly related to our APIs or real-time video. We've helped hundreds of customers scale on Daily and we've seen where the sharp edges are in front-end frameworks like React. We've seen where apps tend to hit scaling bottlenecks as they grow. And we've seen how Apple app store approvals work for apps that do video and audio. We want to be a resource for our customers. We want to save your product team time.

My advice here is to talk to a vendor's developer support engineers as much as you can during your eval process. We've sometimes had customers say to us, during their vendor evaluation, "we don't want to talk to you too much because we want to make an independent decision." I always tell them the same thing: you can certainly take everything we tell you with as big a grain of salt as you want to. But not talking to eng support just makes it impossible for you to evaluate one of the important things that you should be getting from a vendor, both early on as you build or port and over the long term as you scale. You should expect great developer support. And great support can save you meaningful amounts of time and help you make meaningful improvements to your customers' video experience.

So, that's it for the five major components of vendor evaluation: reliability, quality, features, compliance, and support.

Next we'll talk about planning your implementation and evaluating resources.

Planning and resource allocation

First, it's worth thinking through whether your team knows your existing Twilio Video implementation well, and if not, what to do about that.

If engineers on your team wrote or actively maintain your Twilio Video implementation, then you're in good shape.

You almost certainly want the engineers who know your current video code just to do the port. The APIs for all major video platforms other than Zoom are similar enough that your engineers will be able to translate their existing code to the new APIs in a pretty straightforward way.

On the other hand, if your current video code was written by someone who isn't on the team anymore, consider getting help from a consultant with Twilio Video experience.

Learning the Twilio Video APIs, how your code uses them, and the APIs of another platform is ... more work. It's certainly doable, but a good consultant will likely save you significant time.

We work with several good independent dev shops at Daily. And for Twilio Video customers operating at scale, we offer a $30,000 migration credit to offset the cost for you of making the transition to Daily.

Here's my rule of thumb — my starting point — for planning a migration. The implementation work involved in porting each major part of your video tech stack will be about 2 FTE weeks.

So, for example, you have a fairly standard 1:1 telehealth app feature set. You do real-time video, a little bit of in-call messaging, and of course you have a user flow that moves patients and providers into and out of the call. But you don't do any recording and you haven't built out any BI data or observability system integrations.

If your team knows your current video implementation, porting should take one engineer about two weeks, or two engineers that work well together about a week.

On the other hand, if your video feature set is complex and includes multiple different video use cases, including recording, and you've built out custom integrations at the metrics and data layer, then a port is likely to be much closer to six weeks of FTE work.

Implementing and testing

Once you have time from engineers — and maybe an engineering manager — blocked out, you can dive into writing code!

Here are two things that I think are very important. If you only remember two things from this presentation, these two things should be it!

- Initially, do a straight port of your video implementation, changing as little as possible. Don't add new features to your application during a port. Don't do any big architectural rewrites.

- Don't build an abstraction layer. Write directly against your platform's APIs.

This advice sometimes surprises people. So let's talk about both of these in a little more detail.

Over the past eight years at Daily, we've helped thousands of customers scale, and worked closely with dozens of those customers during their initial implementations.

The projects that have struggled to ship and scale have almost all either been ports combined with rewrites and new features, or projects that tried to build an abstraction layer to isolate application code from vendor APIs.

Don't port and rewrite application code

Combining a porting project with a rewrite is, almost always, a recipe for a slower, riskier implementation. The more code you change at one time, the harder it is to debug, QA, and evaluate.

Someone on your engineering team might argue that if you're touching all the video code anyway, you should clean it up and fix all the technical debt and little issues that are on the backlog.

But don't do it. Empirically, I can tell you that the fastest, lowest risk approach is port to a new platform while changing as little of your application code as possible. Make sure everything is working as expected in production. After that, turn your attention to architecture improvements and new features!

The challenge of abstraction layers

So, if you're writing new video code anyway, why not create an abstraction layer that makes it easy to switch between platforms down the road? It turns out that designing, building, debugging, and maintaining an abstraction layer is a lot more work than doing several ports. An abstraction layer sounds like a good idea. I've had this conversation with many product teams over the years.

But every single customer we've had who set out to build an abstraction layer has abandoned the effort, either before ever getting to production with the abstraction layer, or down the road to improve code maintainability.

Also, in addition to adding risk and time to a project, using an abstraction layer prevents you from leveraging the specific strengths of a video platform. This can be okay for very simple apps. But even for simple apps, using the lowest common denominator feature set across multiple vendors is not usually a path to success.

Testing a new video implementation

Now let's talk about testing your new video implementation.

In general, if you use our recommended settings at Daily, we can confidently tell you that your video and audio will be delivered reliably to users on a very wide range of real-world networks.

But that's because we do a whole lot of testing ourselves, all the time.

We also know, because we do a lot of competitive benchmarking, and we've helped a lot of customers port to Daily, that this same level of testing isn't done by every video platform.

So I think it's worth diving into the topic of testing, just a little bit. If your vendor doesn't do this testing, your team will need to.

First, it's important to test on simulated bad networks. Developers tend to have fast machines and good network connections.

Just because things work well for your engineering team, during development, does not mean that they will work well for all of your real-world users. Your platform vendor should be able to help you test your application on simulated bad network connections. You can also work with an independent testing service like testRTC.

Here are three basic tests that are worth doing regularly all the way along during development. Together, these three tests will help you make sure that your application is ready for production.

Networking testing: regular tests with real-world conditions

First, test regularly on a cellular data connection.

Second, test in a spot in your office or house that you know has sketchy wifi coverage.

And third, test consistently with a network simulation tool that can mimic a high packet loss, low throughput network connection.

This may seem like a lot, but you will have real-world users that match all of these profiles. In all of these cases, video should degrade gracefully and audio should remain clear.

Device testing

It's also important to test on a variety of real-world devices. Android phones, iPhones, older laptops. Again, your engineering team will likely have fast machines and – for browser-based apps – will tend to test on their laptops during development, not on their phones. But for telehealth, many of your users will be on mobile devices.

Load testing

Finally, it can be valuable to do load testing of your application. This is less of a concern when porting, because you know your app already works well in production. (This is, again, another reason to do a straight port, rather than a bigger rewrite, when moving between platforms. Less new code means less surface area that you will need to test from scratch.)

If you do want to do load testing, there are some unique things about testing video apps. Most load testing tools can't instantiate video sessions.

Here again you can get help from a video-focused test platform like testRTC. Daily also has a set of infrastructure features that make testing with automated and headless video clients easy, which we affectionately call our robot APIs.

Moving traffic

When you've tested internally and are ready to move production traffic, we recommend the following sequence:

- Set up monitoring

- Train your support staff

- Move 10 opt-in customer accounts

- While monitoring, move 10%, than half, then all of your traffic

You can obviously customize these steps to your particular needs and organizational best practices.

In our experience, you will usually find at least one or two bugs in step 3, while testing with a handful of accounts that have opted into being beta testers for your new video implementation. But, generally, if you've worked closely with your vendor to test on a variety of networks and devices, step 4 goes smoothly.

Enterprise firewalls

One wrinkle here is enterprise firewalls.

If you have customers who are behind locked-down enterprise firewalls, you've probably worked with your customers' IT staff to set up configurations that allow Twilio Video traffic on their networks. Getting approval for firewall changes, and implementing and testing those changes, can be time-consuming.

So if you will need your customers to make firewall changes, start this process early.

Twilio's STUN and TURN services are not going away. These two services are a big part of firewall traversal, and they are used by multiple Twilio core products (not just Twilio Video). Daily supports using Twilio STUN and TURN services in combination with Daily. This can minimize the need to make changes to firewall configurations. If you are evaluating multiple vendors, ask about keeping your Twilio STUN and TURN configuration.

Wrapping up

Timelines for migrating a Twilio video application

So how long will all of this take? Of course the most accurate answer is, "it depends."

But, as a rule of thumb, from start to finish a port usually takes between 1 and 3 months. For a typical telehealth app, you can roughly plan on 2 weeks for each of the four phases: vendor selection, implementation planning, implementation and testing, and moving traffic. Which adds up to two months.

We have customers who have ported to Daily and moved all traffic in under a week. We also have customers who have taken 18 months to port and move all traffic.

The big thing I want to leave you with today is that porting to a new vendor is not overwhelming — it's a very manageable process.

Closing thoughts

Break the porting process down into defined phases. And do a straight port, initially, changing as little code outside your video implementation as possible.

Finally, lean on your vendor. We're here to help. If you can't get good support from us when we're trying to win your business and help you move your traffic over, you probably won't get it later. Evaluate our engineering support just like you evaluate our infrastructure and feature set.

Real-time video is a small world and I know most people in our space. All of us — Daily and all our competitors — want every customer to succeed and want to over-deliver on engineering support.

Thanks for listening.

A Q&A followed the live webinar. If you are interested in the Q&A content, please watch the video embedded at the top of this post. For more content about migrating from Twilio Video, see our Migration Resources hub. Also feel free to contact us directly at help@daily.co, on Twitter, or by posting in our community forum.

VCS is the Video Component System, Daily's developer toolkit that lets you build dynamic video compositions and multi-participant live streams. We provide VCS in our cloud-based media pipeline so you can access it easily for recording and live streaming via Daily's API. There's also an open-source web renderer package that lets you render with VCS directly in your web app --- this way, you can use any combination of client and server rendering that best fits your app's needs.

Since its introduction on Daily as a beta feature last year, VCS has gained dozens of features based on requests and ideas from our customers. We're now getting ready to take off the beta label. That means the API and feature set are very close to stable, and it's a good time to take a look at all the new stuff that the platform has added.

What you're reading now is the first in a four-part series of posts covering new features in VCS. In this initial outing, we'll be looking at two new overlay graphics options for richer dynamic visuals. We'll see how a new "highlight lines" data source can be used to provide content to the various graphics overlays. We'll also look at ways to use content behind video elements. You can now use VCS WebFrame as a full-screen live background, which can make it easier to port your existing web-based UIs to VCS for recording and streaming.

So, this first post is about new tools and options for motion graphics and background content. The upcoming second post will focus on customizing video elements and their layout, and will also explain some new debugging tools related to rendering Daily rooms in VCS.

The third post in the series will cover new ways to link data sources into a VCS composition. These will let you render chat, transcript, and emoji reactions automatically. Finally, to conclude this series, we'll be unveiling new open-source libraries and code releases that make it possible to use VCS in your own real-time apps and even offline media pipelines and AI-based automation. So it's going to be worth checking back here regularly!

New graphics overlays

Before we look at the new graphics options, let's have a quick refresher. VCS is a React-based toolkit designed specifically for video compositions. It is open source. There are two parts to the project: the core and the compositions.

The VCS Core project interfaces with the React runtime and provides built-in components like Image, Video, Text, and so on. But it's just a framework. To render something, you need a program that uses VCS. These programs are called compositions.

You could write your own composition from scratch using the VCS tools, but to make life easier, we at Daily provide a baseline composition which includes a rich set of layout and graphics features. When you start a recording or live stream on Daily's cloud, the baseline composition is enabled by default. You can control it by sending parameter values for things like changing layout modes and toggling graphics overlays.